YouTube, with its library of millions of videos, is a rich data source for video content analysis. Scraping YouTube videos, Shorts, playlists, and other video data gives you insight into trending topics and key performance metrics such as view counts and audience engagement. Businesses can use this data to benchmark their performance and make better-informed decisions.

In today’s YouTube web scraping tutorial, you’ll learn how to:

- Use Python and the yt-dlp library to extract YouTube video metadata and download the videos

- Save scraped search results to a JSON file

- Use ScraperAPI to bypass YouTube’s anti-scraping measures effectively and gather more comprehensive data

Get ready to enhance your data scraping skills and take advantage of the wealth of information available on YouTube!

TL;DR: Full YouTube Web Scraper Code

If you're already familiar with web scraping or automating tasks in Python, this script shows how to scrape several types of YouTube data in one place.

Here’s a quick summary of the code’s functionalities:

- Downloading a YouTube Video – Uses yt-dlp to download a video from a specified URL.

- Extracting YouTube Comments – Extracts comments from a YouTube video using yt-dlp.

- Extracting Metadata – Retrieves metadata (e.g., title, width, height, language, channel, likes) from a YouTube video without downloading it.

- Scraping Channel Information – Uses requests and BeautifulSoup to scrape a YouTube channel’s “About” section, extracting the channel name and description.

```python
import requests
from yt_dlp import YoutubeDL
from bs4 import BeautifulSoup


# Downloading a YouTube Video
def download_video(video_url):
    opts = {}
    with YoutubeDL(opts) as yt:
        yt.download([video_url])
    print(f"Downloaded video: {video_url}")


# Extracting YouTube Comments
def extract_comments(video_url):
    opts = {"getcomments": True}
    with YoutubeDL(opts) as yt:
        info = yt.extract_info(video_url, download=False)
    # Use .get() because these keys can be missing (e.g., when comments are disabled)
    comments = info.get("comments") or []
    comment_count = info.get("comment_count")
    print("Number of comments: {}".format(comment_count))
    for comment in comments:
        print(comment["text"])


# Extracting Metadata
def extract_metadata(video_url):
    opts = {}
    with YoutubeDL(opts) as yt:
        info = yt.extract_info(video_url, download=False)
    data = {
        "URL": video_url,
        "Title": info.get("title"),
        "Width": info.get("width"),
        "Height": info.get("height"),
        "Language": info.get("language"),
        "Channel": info.get("channel"),
        "Likes": info.get("like_count"),
    }
    print("Metadata:", data)
    return data


# Scraping Channel Information
def scrape_channel_info(channel_url, api_key):
    params = {
        'api_key': api_key,
        'url': channel_url,
        'render': 'true',  # have ScraperAPI render the page's JavaScript
    }
    response = requests.get('https://api.scraperapi.com', params=params)
    if response.status_code == 200:
        soup = BeautifulSoup(response.text, 'html.parser')
        # These selectors match YouTube's markup at the time of writing and may change
        channel_name = soup.find('yt-formatted-string', {'id': 'text', "class": "style-scope ytd-channel-name"})
        channel_desc = soup.find('div', {'id': 'wrapper', "class": "style-scope ytd-channel-tagline-renderer"})
        if channel_name and channel_desc:
            channel_info = {
                "channel_name": channel_name.text.strip(),
                "channel_desc": channel_desc.text.strip(),
            }
            print("Channel Info:", channel_info)
            return channel_info
        else:
            print("Failed to retrieve channel info")
    else:
        print("Failed to retrieve the page:", response.status_code)
    return None


# Example Usage
if __name__ == "__main__":
    # Download a video
    video_url = "ANY_YOUTUBE_VIDEO_URL"
    download_video(video_url)

    # Extract comments
    video_url_for_comments = "https://www.youtube.com/watch?v=hzXabRASYs8"
    extract_comments(video_url_for_comments)

    # Extract metadata
    video_url_for_metadata = "ANY_YOUTUBE_VIDEO_URL"
    extract_metadata(video_url_for_metadata)

    # Scrape channel information
    api_key = 'YOUR_API_KEY'
    channel_url = 'https://www.youtube.com/@scraperapi/about'
    scrape_channel_info(channel_url, api_key)
```

This code provides a comprehensive way to scrape and analyze various types of YouTube data using Python. To get started, replace the placeholder values (e.g., YOUR_API_KEY, ANY_YOUTUBE_VIDEO_URL) with your actual data.

Interested in diving deeper into scraping YouTube? Continue reading!

Scraping YouTube Data Step-by-Step

Project Requirements for Scraping YouTube Videos and Data

Here are the tools and libraries you’ll need for this project:

- Python 3 – A recent release; yt-dlp drops support for older Python versions over time, so check its README for the current minimum

- yt-dlp – For downloading YouTube videos and extracting their metadata and comments

- ScraperAPI – To help with web scraping without getting blocked

- requests – A simple HTTP library for Python

- BeautifulSoup4 – For parsing HTML and extracting the data you need

- json – Python's built-in module for handling JSON data (no installation needed)

Step 1: Setup Your Project

Let’s start by setting up your project environment. First, create a new directory to keep everything organized and navigate into it.

Open your terminal and run these commands:

```bash
mkdir youtube-scraper
cd youtube-scraper
```

Make sure a recent version of Python 3 is installed on your system. Next, let's install the essential libraries for downloading and scraping YouTube data.

Use the following command to install the libraries:

```bash
pip install yt-dlp requests beautifulsoup4
```

With these steps, your project directory is ready, and you have all the necessary tools installed to start downloading and scraping YouTube data.

Now, let’s dive into the exciting part!

Step 2: Download YouTube Videos with Python

We'll use the yt-dlp library (imported in Python as yt_dlp) to download YouTube videos. It's a powerful tool for working with YouTube content from Python.

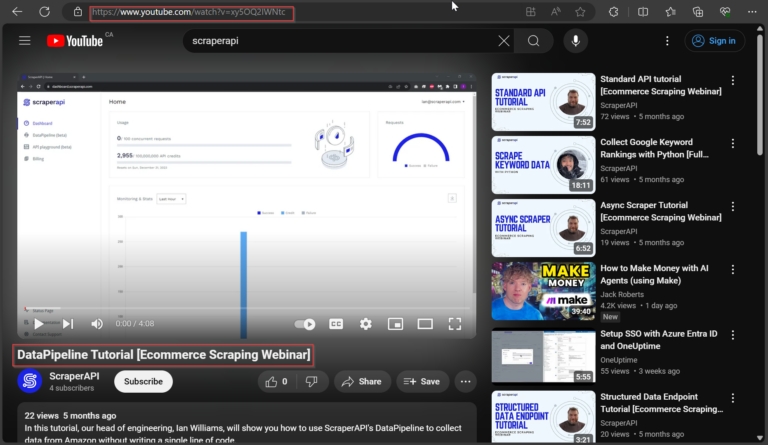

First, we’ll specify the URL of the YouTube video we want to download. You can replace the video_url variable with any YouTube video URL you wish to download.

```python
from yt_dlp import YoutubeDL

video_url = "https://www.youtube.com/watch?v=xy5OQ2IWNtc"
```

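Next, we set up options for the downloader using a Python dictionary. In this snippet, we specify two options:

- 'format': 'best' tells the downloader to pick the best-quality single file that already contains both video and audio. On YouTube, this is often not the highest resolution available, because high-resolution video is served as separate video and audio streams. If you omit 'format', yt-dlp's default selection prefers merging the best video and audio streams when FFmpeg is installed.

- 'outtmpl': '%(title)s.%(ext)s' specifies the output filename template. Here, %(title)s is replaced by the video's title and %(ext)s by the file extension (e.g., mp4, webm).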

```python
# Set options for the downloader
opts = {
    'format': 'best',  # Best single file that contains both video and audio
    'outtmpl': '%(title)s.%(ext)s'  # Output template for the filename
}
```

Finally, we create a YoutubeDL object with the specified options and call its download() method. The method accepts a list of URLs, so you can pass several videos in a single call; yt-dlp downloads them one after another.

Here's the code for this step:

```python
# Create a YoutubeDL object and download the video
with YoutubeDL(opts) as yt:
    yt.download([video_url])
```

Running these three snippets together downloads the specified YouTube video in the chosen format and names the file according to the template.
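If you want more control over quality and want to see download progress, yt-dlp's options dictionary also accepts a format selector string and a list of progress hooks. The sketch below is an illustration, not part of the original tutorial. The helper names build_download_opts and print_progress are hypothetical, the 720p cap is an arbitrary example, and merging separate video and audio streams requires FFmpeg to be installed.

```python
import os


def print_progress(d):
    """Progress hook (hypothetical name): yt-dlp calls it with a status dict during downloads."""
    if d["status"] == "downloading":
        downloaded = d.get("downloaded_bytes") or 0
        total = d.get("total_bytes") or d.get("total_bytes_estimate")
        if total:
            print(f"\r{downloaded / total:.1%} of {d.get('filename')}", end="")
    elif d["status"] == "finished":
        print(f"\nFinished downloading {d.get('filename')}")


def build_download_opts(max_height=720, output_dir="downloads"):
    """Hypothetical helper returning a yt-dlp options dict; 720p is an arbitrary default."""
    return {
        # Best video stream up to max_height merged with the best audio stream,
        # falling back to the best single file up to max_height (merging needs FFmpeg)
        "format": f"bv*[height<={max_height}]+ba/b[height<={max_height}]",
        # Save files as <output_dir>/<title> [<id>].<ext>
        "outtmpl": os.path.join(output_dir, "%(title)s [%(id)s].%(ext)s"),
        # Download only the single video, even if the URL also references a playlist
        "noplaylist": True,
        "progress_hooks": [print_progress],
    }

# Usage (downloads a real video):
# from yt_dlp import YoutubeDL
# with YoutubeDL(build_download_opts()) as yt:
#     yt.download(["https://www.youtube.com/watch?v=xy5OQ2IWNtc"])
```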

Step 3: Scrape YouTube Metadata

Important Note

The information provided here is for educational purposes only. This guide does not grant any rights to the videos or images, which may be protected by copyright, intellectual property, or other legal rights. Before downloading content, consult legal advisors and review the website’s terms of service.

Without downloading the video, we can use the yt_dlp library to get details about a YouTube video, such as its title, resolution, channel, and language.

As an example, let's scrape the details of the same video we downloaded in Step 2 using the extract_info() method. Setting its download parameter to False retrieves the information without downloading the video again. The method returns a dictionary containing the video's information.

Here’s a snippet for extracting the data:

```python
from yt_dlp import YoutubeDL

video_url = "https://www.youtube.com/watch?v=xy5OQ2IWNtc"
opts = dict()

with YoutubeDL(opts) as yt:
    info = yt.extract_info(video_url, download=False)

video_title = info.get("title")
width = info.get("width")
height = info.get("height")
language = info.get("language")
channel = info.get("channel")
likes = info.get("like_count")

data = {
    "URL": video_url,
    "Title": video_title,
    "Width": width,
    "Height": height,
    "Language": language,
    "Channel": channel,
    "Likes": likes
}

print(data)
```
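To collect metadata for several videos in a spreadsheet-friendly format, you can write the dictionaries to a CSV file with Python's built-in csv module. This is a minimal sketch: save_metadata_csv is a hypothetical helper, and it assumes every row uses the same keys as the data dictionary above.

```python
import csv


def save_metadata_csv(rows, path="metadata.csv"):
    """Write a list of metadata dicts (one per video) to CSV; returns the row count.

    Hypothetical helper: column order follows the keys of the first row.
    """
    if not rows:
        return 0
    fieldnames = list(rows[0].keys())
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)  # None values are written as empty cells
    return len(rows)

# Usage: collect one dictionary per video, then save them all at once
# rows = [data]  # e.g. the data dictionary built above, or several of them
# save_metadata_csv(rows)
```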

To get more details about the video, you can use other keys from the dictionary returned by extract_info(). Here are some useful ones:

| Key | Description |
|---|---|
| id | Video ID |
| title | Video title |
| description | Video description |
| uploader | Name of the uploader |
| uploader_id | Uploader's ID |
| upload_date | Upload date (YYYYMMDD) |
| duration | Duration in seconds |
| view_count | Number of views |
| like_count | Number of likes |
| dislike_count | Number of dislikes (usually unavailable, since YouTube hid public dislike counts in 2021) |
| comment_count | Number of comments |
| thumbnail | URL of the thumbnail image |
| formats | List of available formats |
| subtitles | Available subtitles |
| age_limit | Age restriction (minimum viewer age) |
| categories | Categories related to the video |
| tags | Tags related to the video |
| is_live | Whether the video is a live stream |

These keys will help you get all the information you need about a YouTube video.
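Some of these values are easier to work with after a little conversion: upload_date is a YYYYMMDD string and duration is a number of seconds. The sketch below picks a few keys from the table and normalizes them. summarize_info is a hypothetical helper name, and it uses .get() because not every key is present for every video.

```python
from datetime import datetime, timedelta


def summarize_info(info):
    """Hypothetical helper: pick common fields from a yt-dlp info dict and normalize them."""
    upload_date = info.get("upload_date")  # e.g. "20240131"
    duration = info.get("duration")        # seconds (int or float)
    return {
        "id": info.get("id"),
        "title": info.get("title"),
        "uploader": info.get("uploader"),
        # Convert YYYYMMDD to ISO 8601 (YYYY-MM-DD)
        "upload_date": (
            datetime.strptime(upload_date, "%Y%m%d").date().isoformat() if upload_date else None
        ),
        # Convert seconds to H:MM:SS
        "duration": str(timedelta(seconds=int(duration))) if duration is not None else None,
        "view_count": info.get("view_count"),
        "like_count": info.get("like_count"),
        "comment_count": info.get("comment_count"),
        "tags": info.get("tags") or [],
    }

# Usage with the info dictionary from the snippet above:
# print(summarize_info(info))
```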

Step 4: Scrape YouTube Comments

To scrape comments from a YouTube video, we’ll use the yt_dlp library to extract the comments without downloading the video itself.

In this example, we’ll be scraping the comments from this video: A brief introduction to Git for beginners

First, we import the YoutubeDL class from the yt_dlp library and specify the URL of the YouTube video we want to scrape:

```python
from yt_dlp import YoutubeDL

# URL of the YouTube video
video_url = "https://www.youtube.com/watch?v=r8jQ9hVA2qs"
```

Next, we create an options dictionary opts with the key "getcomments" set to True. This tells yt_dlp that we want to extract comments:

```python
# Options for YoutubeDL to get comments
opts = {
    "getcomments": True,
}
```
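By default, yt-dlp tries to fetch every comment, which can take a long time on popular videos. Its YouTube extractor accepts extractor arguments that cap how many comments are gathered and choose the sort order. This sketch assumes the max_comments and comment_sort extractor arguments described in yt-dlp's README, passed as the Python equivalent of the --extractor-args option. Check the README for your installed version, since these options can change. comment_opts is a hypothetical helper name.

```python
def comment_opts(max_comments=100, sort="top"):
    """Hypothetical helper: yt-dlp options that fetch at most max_comments comments.

    Assumes yt-dlp's YouTube extractor arguments max_comments and comment_sort.
    """
    if sort not in ("top", "new"):
        raise ValueError("sort must be 'top' or 'new'")
    return {
        "getcomments": True,
        # Extractor arguments are given as lists of strings, mirroring --extractor-args
        "extractor_args": {
            "youtube": {
                "max_comments": [str(max_comments)],
                "comment_sort": [sort],
            }
        },
    }

# Usage (makes a network request):
# with YoutubeDL(comment_opts(50)) as yt:
#     info = yt.extract_info(video_url, download=False)
```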

Using the YoutubeDL class with our specified options, we extract the video information, including comments, by calling the extract_info() method we used earlier.

Remember to set the download parameter to False so we only retrieve the metadata and comments without downloading the actual video:

```python
# Extract video information, including comments
with YoutubeDL(opts) as yt:
    info = yt.extract_info(video_url, download=False)
```

Next, we use the get() method on the dictionary to read the "comments" key, which holds a list of comment dictionaries. We also read the total number of comments YouTube reports for the video from the "comment_count" key. Using get() for both avoids a KeyError if a key is missing, for example when comments are disabled:

```python
# Get the comments and the total comment count
comments = info.get("comments")
comment_count = info.get("comment_count")
```

Finally, we print the comment count and the comments themselves:

```python
print("Number of comments: {}".format(comment_count))
print(comments)
```

Here is the complete code together:

```python
from yt_dlp import YoutubeDL

# URL of the YouTube video
video_url = "https://www.youtube.com/watch?v=r8jQ9hVA2qs"

# Options for YoutubeDL to get comments
opts = {
    "getcomments": True,
}

# Extract video information, including comments
with YoutubeDL(opts) as yt:
    info = yt.extract_info(video_url, download=False)

# Get the comments and the total comment count
comments = info.get("comments")
comment_count = info.get("comment_count")

# Print the comment count and the comments
print("Number of comments: {}".format(comment_count))
print(comments)
```
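Printing the raw list is fine for a quick check, but you'll usually want to separate top-level comments from replies and rank them. In yt-dlp's comment dictionaries, top-level comments have their parent field set to "root", while replies carry the ID of the comment they answer. This sketch assumes that layout plus the text, author, and like_count fields. top_comments and reply_counts are hypothetical helper names.

```python
def top_comments(comments, n=5):
    """Hypothetical helper: the n most-liked top-level comments as (likes, author, text)."""
    top_level = [c for c in comments if c.get("parent") == "root"]
    # like_count can be missing or None, so treat it as 0 when sorting
    ranked = sorted(top_level, key=lambda c: c.get("like_count") or 0, reverse=True)
    return [(c.get("like_count") or 0, c.get("author"), c.get("text")) for c in ranked[:n]]


def reply_counts(comments):
    """Hypothetical helper: count replies per top-level comment ID."""
    counts = {}
    for c in comments:
        parent = c.get("parent")
        if parent and parent != "root":
            counts[parent] = counts.get(parent, 0) + 1
    return counts

# Usage with the info dictionary from the code above:
# for likes, author, text in top_comments(info.get("comments") or []):
#     print(likes, author, text)
```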

Step 5: Scrape YouTube Channel Information

To scrape information about a YouTube channel, we can use ScraperAPI to avoid getting blocked, the requests library to handle HTTP requests, and the BeautifulSoup library to parse HTML content.

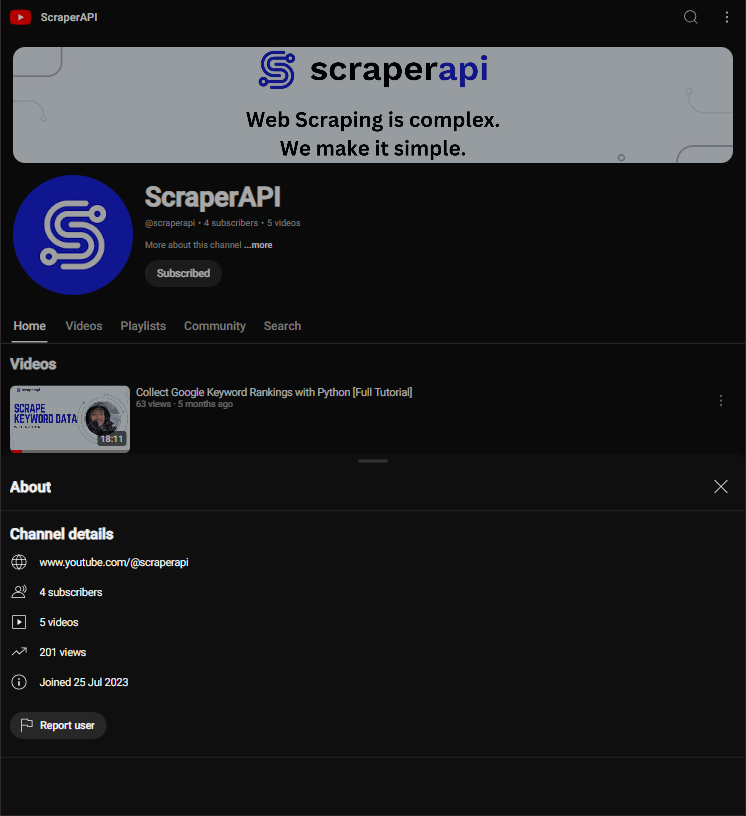

For this example, we'll scrape the “About” page of ScraperAPI's YouTube channel and extract the channel name and description.

First, we set up the API key for ScraperAPI and the URL of the YouTube channel’s “About” page.

Note: Don’t have an API key? Create a free ScraperAPI account and get 5,000 API credits to test all our tools.

We also define the parameters for the API request. Setting render to true tells ScraperAPI to execute the page's JavaScript before returning the HTML, which matters for YouTube because much of its content is rendered client-side:

```python
api_key = 'YOUR_API_KEY'
url = 'https://www.youtube.com/@scraperapi/about'

params = {
    'api_key': api_key,
    'url': url,
    'render': 'true'
}
```

We then make a GET request to the ScraperAPI endpoint, passing in our parameters. ScraperAPI fetches and renders the YouTube page on our behalf, which helps avoid blocks, and returns its HTML:

```python
response = requests.get('https://api.scraperapi.com', params=params)
```
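Rendered requests can occasionally time out or return a non-200 status, so it can help to retry a few times with a growing delay. This is a generic sketch, not a ScraperAPI feature. get_with_retries is a hypothetical helper, the attempt count, backoff, and 70-second timeout are arbitrary choices, and the get and sleep parameters exist only so the function can be tested without network access.

```python
import time


def get_with_retries(url, params, attempts=3, backoff=2.0, timeout=70, get=None, sleep=time.sleep):
    """Hypothetical helper: call get() until it returns status 200 or attempts run out.

    Returns the last response (or None if every attempt raised an error).
    """
    if get is None:
        import requests  # imported lazily so the helper can be tested with a fake get()
        get = requests.get
    response = None
    for attempt in range(attempts):
        try:
            # Rendering can be slow, so allow a generous timeout (70 s is an assumption)
            response = get(url, params=params, timeout=timeout)
            if response.status_code == 200:
                return response
        except OSError as exc:  # requests' exceptions subclass OSError (IOError)
            print(f"Attempt {attempt + 1} failed: {exc}")
        if attempt < attempts - 1:
            sleep(backoff ** attempt)  # wait 1s, 2s, 4s, ... between attempts
    return response

# Usage:
# response = get_with_retries('https://api.scraperapi.com', params)
```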

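If the request is successful (status code 200), we use BeautifulSoup to parse the HTML and extract the channel's name and description. We locate these elements by their tags, IDs, and classes, which you can find by inspecting the page with your browser's developer tools. YouTube changes its markup regularly, so recheck these selectors if the code stops finding anything: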

```python
channel_info = None

if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    channel_name = soup.find('yt-formatted-string', {'id': 'text', "class": "style-scope ytd-channel-name"})
    channel_desc = soup.find('div', {'id': 'wrapper', "class": "style-scope ytd-channel-tagline-renderer"})

    # Only build the result if both elements were found
    if channel_name and channel_desc:
        channel_info = {
            "channel_name": channel_name.text.strip(),
            "channel_desc": channel_desc.text.strip(),
        }
    else:
        print("Failed to retrieve channel info")
else:
    print("Failed to retrieve the page:", response.status_code)
```

Finally, we print the extracted channel information:

```python
print(channel_info)
```

Here’s the complete code all together:

```python
import requests
from bs4 import BeautifulSoup

# API key for ScraperAPI
api_key = 'YOUR_API_KEY'

# URL of the YouTube channel's About page
url = 'https://www.youtube.com/@scraperapi/about'

# Parameters for the API request
params = {
    'api_key': api_key,
    'url': url,
    'render': 'true'
}

# Make a GET request to ScraperAPI
response = requests.get('https://api.scraperapi.com', params=params)

channel_info = None

# If the request is successful, parse the HTML and extract the channel info
if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    channel_name = soup.find('yt-formatted-string', {'id': 'text', 'class': 'style-scope ytd-channel-name'})
    channel_desc = soup.find('div', {'id': 'wrapper', 'class': 'style-scope ytd-channel-tagline-renderer'})

    # Guard against missing elements; YouTube's markup changes frequently
    if channel_name and channel_desc:
        channel_info = {
            'channel_name': channel_name.text.strip(),
            'channel_desc': channel_desc.text.strip(),
        }
    else:
        print("Failed to retrieve channel info")
else:
    print("Failed to retrieve the page:", response.status_code)

# Print the extracted channel information (None if extraction failed)
print(channel_info)
```
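YouTube's class names change often, so the selectors above can stop matching without warning. A fallback worth trying is the page's meta tags. YouTube pages have typically included Open Graph tags such as og:title and og:description, but treat that as an assumption to verify against the HTML you actually receive. The sketch uses only Python's built-in html.parser. MetaTagParser and channel_info_from_meta are hypothetical names.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Hypothetical parser: collect <meta> tags keyed by their property or name attribute."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        key = attributes.get("property") or attributes.get("name")
        content = attributes.get("content")
        if key and content is not None:
            self.meta.setdefault(key, content)  # keep the first occurrence


def channel_info_from_meta(html):
    """Return name and description from Open Graph tags (assumed present), or None."""
    parser = MetaTagParser()
    parser.feed(html)
    name = parser.meta.get("og:title")
    if name is None:
        return None
    return {
        "channel_name": name.strip(),
        "channel_desc": (parser.meta.get("og:description") or "").strip(),
    }

# Usage with the ScraperAPI response from the code above:
# print(channel_info_from_meta(response.text))
```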

Using ScraperAPI makes it easy to retrieve web content, helping us to scrape data from websites like YouTube that may have restrictions.

ScraperAPI handles all the complexities of web scraping, such as managing IP rotation, handling CAPTCHAs, and rendering JavaScript, which can often block traditional web scraping techniques. Thus, we can focus on extracting the necessary data without worrying about these common issues.

For instance, YouTube often uses various mechanisms to prevent automated access, including rate limiting and complex page structures that require JavaScript rendering. By using ScraperAPI, we can effortlessly bypass these restrictions, allowing us to obtain data such as channel information, video details, and comments without being blocked.

How to Scrape YouTube Search Results

Scraping YouTube search results can provide valuable insights into popular content and trends related to specific keywords. In this example, we will use ScraperAPI to scrape the top YouTube search results for the query “scraperapi” and extract the videos’ titles and links.

First, we import the necessary libraries:

```python
import requests
from bs4 import BeautifulSoup
import json
```

Next, we set up our ScraperAPI key and specify the search query. We also construct the URL for the YouTube search results page:

```python
api_key = 'YOUR_API_KEY'
search_query = 'scraperapi'
url = f'https://www.youtube.com/results?search_query={search_query}'

params = {
    'api_key': api_key,
    'url': url,
    'render': 'true',
}
```
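The f-string works for a single-word query like scraperapi, but queries with spaces or characters such as & need to be URL-encoded before they go into the YouTube URL. Here is a minimal sketch using the standard library's urlencode. build_search_url is a hypothetical helper name.

```python
from urllib.parse import urlencode


def build_search_url(query):
    """Hypothetical helper: build a YouTube search URL with the query URL-encoded."""
    # urlencode uses quote_plus, so spaces become '+' and '&' becomes '%26'
    return "https://www.youtube.com/results?" + urlencode({"search_query": query})

# Usage:
# url = build_search_url("web scraping tutorial")
```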

We initialize an empty list called video_data to store the extracted video information and make a request to the ScraperAPI endpoint with our parameters. This will allow us to retrieve the HTML content of the search results page:

```python
video_data = []
response = requests.get('https://api.scraperapi.com', params=params)
```

If the request is successful (status code 200), we parse the HTML content using BeautifulSoup:

```python
if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    videos = soup.find_all('div', {"id": "title-wrapper"})
```

We use soup.find_all() to find all div elements with the id of title-wrapper, which contain the video titles and links.


After that, we check if any video elements were found. If videos are found, we print the number of videos found and loop through each element to extract the title and link.

We read the anchor tag's title attribute, which holds the video's title, and its href attribute, which holds the video's relative URL. We prepend the base URL “https://www.youtube.com” to the href to form the full URL.

We then store the extracted title and link in a dictionary and append it to the video_data list.

```python
if videos:
    print(f"Found {len(videos)} videos")
    for video in videos:
        video_details = video.find("a", {"id": "video-title"})
        if video_details:
            title = video_details.get('title')
            link = video_details['href']
            video_info = {"title": title, "link": f"https://www.youtube.com{link}"}
            video_data.append(video_info)
```

After extracting all video details, we save the video_data list to a JSON file for further use.

```python
with open('videos.json', 'w') as json_file:
    json.dump(video_data, json_file, indent=4)
```

Here’s the complete code all together:

```python
import requests
from bs4 import BeautifulSoup
import json

# API key for ScraperAPI
api_key = 'YOUR_API_KEY'

# Search query for YouTube
search_query = 'scraperapi'

# URL of the YouTube search results page
url = f'https://www.youtube.com/results?search_query={search_query}'

# Parameters for the API request
params = {
    'api_key': api_key,
    'url': url,
    'render': 'true',
}

# Initialize an empty list to store video data
video_data = []

# Make a GET request to ScraperAPI
response = requests.get('https://api.scraperapi.com', params=params)

# If the request is successful, parse the HTML and extract video info
if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    videos = soup.find_all('div', {"id": "title-wrapper"})

    if videos:
        print(f"Found {len(videos)} videos")
        for video in videos:
            video_details = video.find("a", {"id": "video-title"})
            if video_details:
                title = video_details.get('title')
                link = video_details['href']
                video_info = {"title": title, "link": f"https://www.youtube.com{link}"}
                video_data.append(video_info)

        # Save the extracted video data to a JSON file
        with open('videos.json', 'w') as json_file:
            json.dump(video_data, json_file, indent=4)
    else:
        print("No videos found")
else:
    print("Failed to retrieve the page:", response.status_code)
```
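The collected links can include Shorts (/shorts/ID) as well as regular /watch?v=ID URLs, sometimes with extra query parameters, and the same video can appear more than once. This sketch extracts the video ID from each link and drops duplicates. video_id_from_link and dedupe_videos are hypothetical helpers, and the two URL shapes they handle are the common ones, not an exhaustive list.

```python
from urllib.parse import urlparse, parse_qs


def video_id_from_link(link):
    """Hypothetical helper: video ID from a /watch?v=ID or /shorts/ID URL, else None."""
    parsed = urlparse(link)
    if parsed.path == "/watch":
        return parse_qs(parsed.query).get("v", [None])[0]
    if parsed.path.startswith("/shorts/"):
        return parsed.path.split("/")[2] or None
    return None


def dedupe_videos(video_data):
    """Hypothetical helper: keep the first entry per video ID and add the ID to it."""
    seen = set()
    unique = []
    for video in video_data:
        video_id = video_id_from_link(video["link"])
        if video_id is None or video_id in seen:
            continue
        seen.add(video_id)
        unique.append({**video, "id": video_id})
    return unique

# Usage before saving:
# video_data = dedupe_videos(video_data)
```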

Legal Considerations When Scraping YouTube

Scraping data from websites like YouTube can offer valuable insights, but it’s crucial to be aware of the legal considerations and potential consequences. Here are some key points to keep in mind:

Terms of Service

- Compliance: Ensure that your activities comply with YouTube's Terms of Service. Scraping in ways that violate these terms can lead to your IP being blocked, your YouTube account being suspended, or legal action being taken against you.

- User Agreements: Review YouTube’s user agreements and community guidelines to understand what is permissible. Unauthorized scraping can breach these agreements, leading to potential legal ramifications.

Copyright and Intellectual Property

- Content Ownership: Copyright and other intellectual property laws protect YouTube videos, thumbnails, and metadata. Scraping and using this content without proper authorization can result in copyright infringement.

- Fair Use: While some uses of copyrighted material might fall under “fair use” (e.g., for commentary, criticism, or educational purposes), this is a complex legal doctrine that varies by jurisdiction.

Data Privacy

- Personal Information: Be cautious when scraping data that includes personal information. Privacy laws, such as the General Data Protection Regulation (GDPR) in the European Union, impose strict regulations on collecting, storing, and using personal data.

- Anonymization: If you collect data that might include personal information, consider anonymizing it to protect individuals’ privacy and comply with data protection laws.

Ethical Considerations

- Respectfulness: Ethical scraping involves respecting the target website’s robots.txt file, which specifies which parts of the site should not be scraped. While not legally binding, respecting this file demonstrates good faith and ethical behavior.

- Impact: Be mindful of the impact of your scraping activities on YouTube’s servers and the user experience. High-frequency scraping can strain servers and degrade service quality for other users.
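Python's standard library can check robots.txt rules for you with urllib.robotparser. The sketch below parses a small made-up robots.txt (not YouTube's actual file) so it runs offline. For a real site, you would call set_url() with the site's robots.txt address and then read() instead of parse(). is_allowed is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(robots_txt, url, user_agent="*"):
    """Hypothetical helper: True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# For a live site:
# rp = RobotFileParser()
# rp.set_url("https://www.youtube.com/robots.txt")
# rp.read()
# print(rp.can_fetch("*", "https://www.youtube.com/results?search_query=test"))
```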

Scraping YouTube data can be beneficial but must be done responsibly and legally. Always ensure compliance with YouTube’s Terms of Service, respect copyright and intellectual property rights, protect personal data, and consider the ethical implications of your actions.

By adhering to these guidelines, you can minimize legal risks and ensure that your data scraping practices are ethical and lawful.

How to Turn YouTube Pages into LLM-Ready Data

Once you've scraped metadata, comments, or channel info, you can go further by feeding that data into a large language model such as Google Gemini. Instead of writing your own parsing or analysis logic, you can have Gemini summarize feedback, identify recurring viewer sentiments, or extract channel themes.

To make this process seamless, ScraperAPI supports output_format=markdown, which returns YouTube pages in clean, readable Markdown, perfect for LLM input.

Step 1: Obtain and Secure Your API Keys

To get started, ensure you have a ScraperAPI key and a Google Gemini API key. If you already have them, you can skip to the next step. You can get your ScraperAPI key from your dashboard or sign up here for a free one with 5,000 API credits.

To get a Gemini API key:

- Go to Google AI Studio

- Sign in with your Google account

- Click on Create API Key

- Follow the prompts to generate your key

Step 2: Scrape YouTube as Markdown

Once you've secured your API keys, scrape a YouTube video page using ScraperAPI with render=true and output_format=markdown. This returns the page as Markdown, including the video title and description and, depending on what has loaded when the page is captured, some comments.

```python
import requests

API_KEY = "YOUR_SCRAPERAPI_KEY"
url = "https://www.youtube.com/watch?v=r8jQ9hVA2qs"

payload = {
    "api_key": API_KEY,
    "url": url,
    "render": "true",
    "output_format": "markdown"
}

response = requests.get("https://api.scraperapi.com", params=payload)
response.raise_for_status()  # stop early if the request failed
markdown_data = response.text
print(markdown_data)
```

Here’s a snippet of how the result would look in markdown:

```markdown
Chapters
View all
[ Introduction to Git and Version Control Introduction to Git and Version Control 0:00 ](/watch?v=r8jQ9hVA2qs)
[ Introduction to Git and Version Control ](/watch?v=r8jQ9hVA2qs)
0:00
[ What is Git? What is Git? 0:44 ](/watch?v=r8jQ9hVA2qs&t=44s)
[ What is Git? ](/watch?v=r8jQ9hVA2qs&t=44s)
0:44
[ Basic Git Concepts Basic Git Concepts 1:41 ](/watch?v=r8jQ9hVA2qs&t=101s)
[ Basic Git Concepts ](/watch?v=r8jQ9hVA2qs&t=101s)
1:41
[ Installing Git Installing Git 3:06 ](/watch?v=r8jQ9hVA2qs&t=186s)
[ Installing Git ](/watch?v=r8jQ9hVA2qs&t=186s)
3:06
[ Configuring Git Configuring Git 5:10 ](/watch?v=r8jQ9hVA2qs&t=310s)
[ Configuring Git ](/watch?v=r8jQ9hVA2qs&t=310s)
5:10
[ Basic Terminal and Git Commands Basic Terminal and Git Commands 5:52 ](/watch?v=r8jQ9hVA2qs&t=352s)
[ Basic Terminal and Git Commands ](/watch?v=r8jQ9hVA2qs&t=352s)
5:52
[ Difference between Git and GitHub Difference between Git and GitHub 8:29 ](/watch?v=r8jQ9hVA2qs&t=509s)
```

Step 3: Summarize the YouTube page with Gemini

Now that you have the Markdown, you can feed it to Google Gemini and extract a clean summary.

Start by installing the Gemini SDK if you haven’t already:

```bash
pip install google-generativeai
```

Now you can request a summary from Gemini using a custom prompt:

```python
import google.generativeai as genai

genai.configure(api_key="YOUR_GEMINI_API_KEY")

# Gemini model names are retired over time; check Google's current model list
model = genai.GenerativeModel(model_name="gemini-2.5-flash")

prompt = f"""
You're a content strategist. Based on the following YouTube video page, summarise:
- The topic of the video
- Key themes from the description and comments
- Overall viewer sentiment (positive/negative/mixed) if available
- Any common suggestions or complaints from viewers if available

Here's the data:
{markdown_data}
"""

response = model.generate_content(prompt)
print(response.text)
```

Gemini will give you a similar response to this:

```markdown
Based on the provided YouTube video page data:

**Topic and Intent of the Video:**

The video is a beginner's introduction to Git, a version control system commonly used in software development. The intent is to teach viewers the fundamentals of Git, enabling them to use it in their projects. It aims to provide a solid foundational understanding.

**Key Points Mentioned in the Description:**

The description highlights that the video covers:

* Fundamentals of Git
* Installation of Git
* Configuration of Git
* Essential concepts like staging, committing, and branching.
* A "GitHub for Beginners" video series is implied.
* The presenter is identified as GitHub Developer Advocate Kedasha Kerr.

The description also includes links to further resources on Git and GitHub, including documentation and a GitHub community forum.

[TRUNCATED]
```

Using output_format=markdown means you can skip manual parsing and work directly with clean, structured YouTube page content. You can feed this output into Gemini to summarize what a video is about and extract key points from the description. When comments are present, Gemini can also analyze viewer sentiment and highlight recurring feedback. Even without comments, you can still generate useful summaries of a video's purpose, structure, and external links. With one ScraperAPI call and one Gemini prompt, you go from raw page data to insights you can use for content strategy, competitor research, or audience analysis.
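Google has since introduced a newer Python SDK, google-genai (installed with pip install google-genai), which it recommends over google-generativeai, and older Gemini model names are retired over time. The sketch below sends the same request with the newer SDK and adds a simple character cap so very long pages don't overflow the prompt. build_prompt and summarize_page are hypothetical helper names. The 100,000-character limit is an arbitrary assumption, not a model limit, and you should check the model name against Google's current model list.

```python
PROMPT_TEMPLATE = """You're a content strategist. Based on the following YouTube video page, summarize:
- The topic of the video
- Key themes from the description and comments
- Overall viewer sentiment (positive/negative/mixed) if available
- Any common suggestions or complaints from viewers if available

Here's the data:
{page}
"""


def build_prompt(markdown_data, max_chars=100_000):
    """Hypothetical helper: insert page Markdown into the prompt, truncated to max_chars."""
    return PROMPT_TEMPLATE.format(page=markdown_data[:max_chars])


def summarize_page(markdown_data, api_key, model="gemini-2.5-flash"):
    """Hypothetical helper: send the prompt with the google-genai SDK and return the text.

    The model name is an assumption; check Google's current model list.
    """
    from google import genai  # imported here so build_prompt works without the SDK installed

    client = genai.Client(api_key=api_key)
    response = client.models.generate_content(model=model, contents=build_prompt(markdown_data))
    return response.text

# Usage:
# print(summarize_page(markdown_data, "YOUR_GEMINI_API_KEY"))
```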

Wrapping Up – How to Scrape Videos from YouTube

In this YouTube scraping guide, you've learned:

- How to use Python to scrape valuable YouTube data, including video metadata, comments, channel information, and search results

- How to use libraries such as yt-dlp, Requests, and BeautifulSoup to gather and analyze information from YouTube while navigating the complexities of web scraping

- How to use ScraperAPI to bypass anti-bot protections and build resilient data pipelines

Now that you have the skills to scrape YouTube data, here are some ideas on what to do with this data:

- Content Analysis: Analyze content performance and audience engagement in depth to optimize your video strategy

- Market Research: Use search results and metadata to understand current trends and what types of content resonate with your target audience

- Sentiment Analysis: Analyze comments to gauge audience reactions and opinions on various topics or videos

- Competitive Analysis: You can use the channel data to study competitor channels and their content to identify market strengths, weaknesses, and opportunities.

To streamline and enhance your web scraping projects, sign up for a free ScraperAPI account. With ScraperAPI, you can easily bypass IP blocks, handle CAPTCHAs, and render JavaScript, ensuring your scraping activities are efficient and uninterrupted.

Always remember to respect copyright and other legal considerations when scraping and using data from YouTube.

Until next time, happy scraping!