Web scrapers are powerful tools capable of extracting data faster and at a larger scale than humans can. They can be used to compare prices between different vendors, extract information about potential leads for marketing teams to target, perform in-depth competitive analysis, and more.

However, because scrapers and spiders – especially if they are badly implemented – can generate a huge amount of traffic, they can overload the website’s server, hurting real users in the process.

To fight back, a lot of site owners and webmasters use different methods to identify and ban crawlers and keep their data from being collected.

In this article, we’ll talk about the roadblocks every developer will face when scraping the web and how you can use ScraperAPI to work around them in minutes.

Main Challenges When Scraping Websites at Scale

Let’s explore the 5 most common challenges you’ll face when scraping the web at a large scale:

1. Client-side Rendering

So you’ve visually inspected the website you want to scrape, identified the elements you’ll need, and run your script. The problem is that scrapers can only extract data from what they can find in the HTML file, and not dynamically injected content.

This is the most common roadblock you’ll find when scraping JavaScript-heavy websites. Because the content is loaded by AJAX calls or JavaScript executed at runtime in the browser, a regular scraper that only downloads the initial HTML never sees the data it needs.

2. Anti-scraping Techniques

There are several ways websites protect their data from scraping scripts. These techniques analyze a number of metrics and patterns to make sure it is a human who is browsing the site and not a robot.

A simple example of this is analyzing the number of requests from the client. If a client makes too many requests within a particular time frame or there are too many parallel requests from the same IP, the server can go ahead and blacklist the client.

Servers can also measure the number of repetitions and find request patterns (X number of requests at every Y seconds). By defining a threshold, the server can automatically blacklist any client exceeding it.
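To make the threshold idea concrete, here is a minimal sketch of how a server might count requests per client inside a sliding time window. The class name, the limits, and the injectable clock are all illustrative assumptions; real anti-bot systems combine many more signals.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Flags clients that exceed max_requests within window_seconds."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested
        self.hits = defaultdict(deque)  # client IP -> timestamps of recent requests

    def allow(self, client_ip):
        """Record a request; return False once the client is over the threshold."""
        now = self.clock()
        window = self.hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        window.append(now)
        return len(window) <= self.max_requests
```

With `SlidingWindowLimiter(100, 60)`, for example, the 101st request from one IP within a minute would return `False`, and the server could then block or blacklist that client.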

3. Honeypots

Honeypots are link traps webmasters can add to the HTML file that are hidden from humans but can be accessed by web crawlers. This is as simple as adding a CSS property of display:none to the link or blending it into the background. When a web scraper accesses the link, the server can determine it is a robot and not a human, and blacklist the client over time.
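If you’re writing your own crawler, one way to lower the risk is to skip links that are hidden with inline CSS. The sketch below uses only Python’s standard library; the class and function names are our own, and it only catches inline `style` attributes, so links hidden through an external stylesheet or blended into the background would still get through.

```python
from html.parser import HTMLParser


class VisibleLinkParser(HTMLParser):
    """Collects href values, skipping links hidden with inline CSS."""

    HIDDEN_MARKERS = ("display:none", "visibility:hidden")

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        # Normalise the inline style so "display: none" also matches.
        style = (attrs.get("style") or "").replace(" ", "").lower()
        if any(marker in style for marker in self.HIDDEN_MARKERS):
            return  # likely a trap: invisible to human visitors
        if attrs.get("href"):
            self.links.append(attrs["href"])


def visible_links(html):
    """Return the hrefs of all links that are not hidden inline."""
    parser = VisibleLinkParser()
    parser.feed(html)
    return parser.links
```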

4. CAPTCHAs

By redirecting the request to a page with a CAPTCHA, the server creates a challenge our web scraper needs to solve to prove it’s human. This is an effective security mechanism and prevents automated programs from accessing the page.

5. Browser Behaviour Profiling

Because servers can measure how a client interacts with the site, anti-bot mechanisms can spot patterns in the number of clicks, clicks’ location, the interval between clicks, and other metrics and use this information to blacklist a client.

Most of these challenges are easy to work around using ScraperAPI as long as you’re setting up the scraper correctly.

To help you build a scraper more efficiently and avoid bans, you need to make sure you’re implementing a set of best practices.

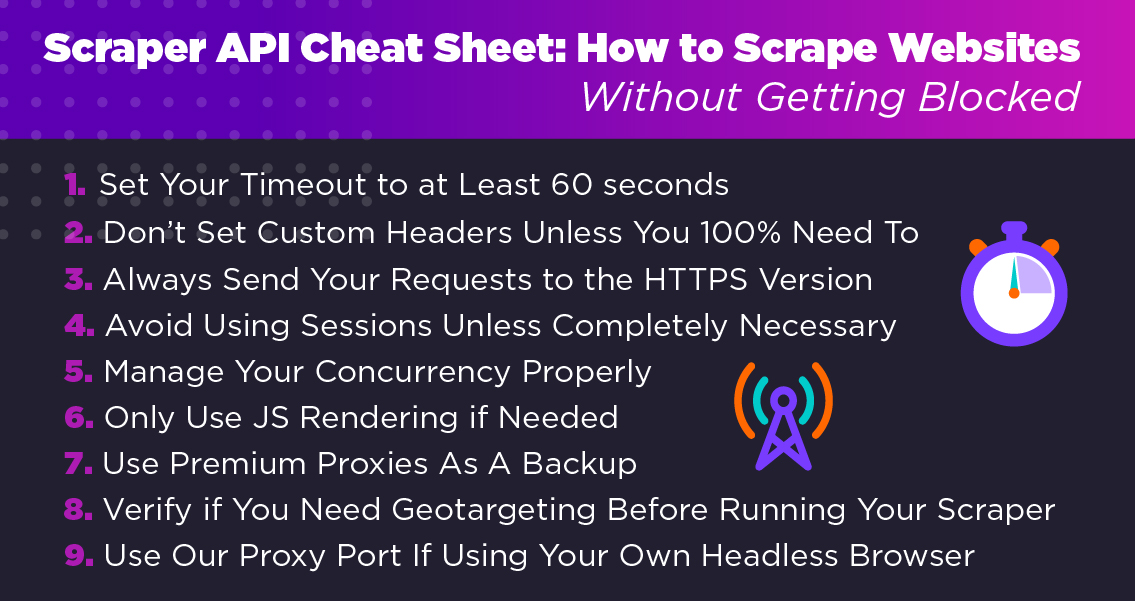

ScraperAPI Cheat Sheet: How to Scrape Websites Without Getting Blocked

ScraperAPI is a proxy solution created to make it easier for developers to scrape the web at scale without the hassle of handling CAPTCHAs, JavaScript rendering, and rotating proxy pools.

However, it is still important to follow web scraping best practices to ensure you’re getting the most out of the API and not hitting any roadblock in your project.

1. Set Your Timeout to at Least 60 Seconds

ScraperAPI handles everything to do with proxy/user agent selection and rotation, so when you send a request to the API, we will keep retrying that request with different proxy/user agent configurations for up to 60 seconds before returning a failed response to you. As a result, when using the API you should always set your timeout to at least 60 seconds.

If you set a shorter timeout period, the connection will be cut off on your end but the API will keep retrying the request until the 60-second timeout is met. If one of those retries succeeds, the request still counts against your monthly limit, even though your client never received the response.
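As a rough illustration, here is how a 60-second client timeout could look using only Python’s standard library. The endpoint URL and the `api_key` and `url` parameter names are assumptions on our part, so confirm them against the API documentation; `build_api_url` and `fetch` are hypothetical helper names.

```python
import urllib.parse
import urllib.request

API_ENDPOINT = "https://api.scraperapi.com/"  # assumed endpoint; confirm in the docs
TIMEOUT_SECONDS = 60  # matches the API's 60-second retry window


def build_api_url(api_key, target_url, **params):
    """Return the API request URL with the target URL safely encoded."""
    query = {"api_key": api_key, "url": target_url, **params}
    return API_ENDPOINT + "?" + urllib.parse.urlencode(query)


def fetch(api_key, target_url, timeout=TIMEOUT_SECONDS, **params):
    """Fetch a page through the API, waiting up to `timeout` seconds."""
    request_url = build_api_url(api_key, target_url, **params)
    with urllib.request.urlopen(request_url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `fetch("YOUR_API_KEY", "https://example.com/")` would then wait the full minute before giving up, instead of dropping the connection while the API is still retrying.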

2. Don’t Set Custom Headers Unless You 100% Need To

ScraperAPI has a sophisticated system to automatically generate and select the optimal user agents and cookies for every website, so unless you really need to set your own headers to retrieve your target data, it’s better to keep it as is.

This will prevent any performance drop and will keep you safe from any header inspection from the website’s server.

3. Always Send Your Requests to the HTTPS Version

Unless the website is only available with HTTP – which is uncommon these days – you should always send your requests to the HTTPS version to avoid duplicate requests caused by a redirection.

In other words, when the HTTP client sends a request to the HTTP version of the site, it is redirected to the secure version (HTTPS). The server reads this as two requests, which increases the chances of the client being flagged as a scraper.
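A small helper can normalize your URL list before any request is sent. This is only a sketch: `prefer_https` is a name we made up, it assumes the site really does serve HTTPS, and it leaves an explicit port such as `:80` untouched.

```python
from urllib.parse import urlsplit, urlunsplit


def prefer_https(url):
    """Rewrite an http:// URL to https://, leaving other URLs unchanged."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        parts = parts._replace(scheme="https")
    return urlunsplit(parts)
```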

4. Avoid Using Sessions Unless Completely Necessary

Using the API’s sessions functionality is another cause of higher failure rates. Because our sessions pool is much smaller than the main proxy pools, it can quickly get burnt out if overused by a single user.

We recommend using this feature only if you strictly need to use the same proxy for multiple requests.

5. Manage Your Concurrency Properly

There’s a limited number of concurrent threads set for each plan (e.g. 50 concurrent threads in the business plan), which limits the number of parallel requests you can make to the API.

Of course, being able to do more parallel requests means faster scraping times as you can get more HTML responses per minute.

It might not be a problem for small scraping projects but can become a bottleneck if you handle a large number of distributed scrapers. In this case, we recommend setting a central cache like Redis, which is a great way to ensure all your scrapers stay within your plan’s concurrency limits.
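For a single scraper process, a bounded thread pool is usually enough to stay under the limit. The sketch below assumes a `fetch_page` callable that you supply, and `MAX_CONCURRENCY` is a placeholder for your own plan’s limit. It does not coordinate across machines; distributed scrapers would still need a shared counter such as the central cache mentioned above.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_CONCURRENCY = 50  # placeholder: set this to your plan's concurrent-thread limit


def scrape_all(urls, fetch_page, max_concurrency=MAX_CONCURRENCY):
    """Fetch every URL with at most `max_concurrency` requests in flight."""
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        # map() preserves input order and never runs more than
        # max_workers calls at the same time.
        return list(pool.map(fetch_page, urls))
```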

6. Only Use JS Rendering if Needed

One of the most important features of our API is automated JavaScript rendering. Just add the flag &render=true to your request and we’ll handle the rest.

However, JS requests take longer to process and may slow down your requests, reducing the number of retries we can make internally before returning a failed response.

By default, there is a 3-requests-per-second burst limit when using JS rendering, so you need to configure your scrapers to stay under this limit along with your plan’s overall concurrency limit.
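One way to respect the burst limit is to space out rendered requests on the client side. The sketch below is a simple pacer that keeps request starts at least 1/rate seconds apart, plus a helper that only adds the render flag when a page needs it. `Pacer` and `render_params` are illustrative names, not part of the API.

```python
import threading
import time


class Pacer:
    """Spaces calls at least 1/rate seconds apart, across threads."""

    def __init__(self, rate_per_second, clock=time.monotonic, sleep=time.sleep):
        self.interval = 1.0 / rate_per_second
        self.clock = clock
        self.sleep = sleep
        self.lock = threading.Lock()
        self.next_slot = 0.0

    def wait(self):
        """Block until the next free slot, then return the delay that was applied."""
        with self.lock:
            now = self.clock()
            slot = max(now, self.next_slot)
            self.next_slot = slot + self.interval  # reserve the following slot
        delay = slot - now
        if delay > 0:
            self.sleep(delay)
        return delay


def render_params(needs_js):
    """Only add render=true for pages that actually need JavaScript."""
    return {"render": "true"} if needs_js else {}
```

In a scraper you would create one `Pacer(3)` shared by all workers and call `pacer.wait()` right before each request that uses `render=true`.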

7. Use Premium Proxies As A Backup

Our API squeezes the maximum performance out of our data center proxies, which are perfect for scraping 99% of all websites. On the other hand, premium proxies are charged at 10x the price of our standard proxies, which definitely adds a lot of unnecessary overhead.

A good best practice is to set your script to use premium proxies only if the request still fails after multiple retries with the standard proxies. That way you get the data you need without overspending.
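The fallback logic can be sketched in a few lines. Here `fetch_page(url, premium)` is a hypothetical callable you would write yourself: it should return the page HTML or raise an exception on failure, and how it switches on premium proxies depends on the API parameter described in the documentation. The number of standard attempts is also just an example value.

```python
def fetch_with_fallback(fetch_page, url, standard_attempts=3):
    """Try standard proxies first; use premium only if they all fail.

    `fetch_page(url, premium)` is a hypothetical callable that returns the
    page HTML or raises an exception on failure.
    """
    for _ in range(standard_attempts):
        try:
            return fetch_page(url, premium=False)
        except Exception:
            continue  # retry on the standard pool
    # Last resort: one request on the more expensive premium pool.
    return fetch_page(url, premium=True)
```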

8. Verify if You Need Geotargeting Before Running Your Scraper

Some websites (like Amazon and Google) return different data depending on where the request is coming from. If you need data from a specific geographic location (e.g. if you need to scrape Amazon results from Italy but you’re based in the U.S.) you’ll need to geotarget the request using the country_code query parameter.

Check out a list of all the geotargeting codes ScraperAPI supports.
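As a sketch, adding the country_code parameter to a request URL could look like this. The endpoint and the `api_key` and `url` parameter names are assumptions, and the code value `it` for Italy is only an example; check the list of supported codes for the exact values.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://api.scraperapi.com/"  # assumed endpoint; confirm in the docs


def geotargeted_url(api_key, target_url, country_code=None):
    """Build an API URL, adding country_code only when one is given."""
    query = {"api_key": api_key, "url": target_url}
    if country_code:
        query["country_code"] = country_code
    return API_ENDPOINT + "?" + urlencode(query)
```

For instance, `geotargeted_url("YOUR_API_KEY", "https://www.amazon.it/", "it")` produces a URL ending in `&country_code=it`.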

9. Use Our Proxy Port If Using Your Own Headless Browser

While ScraperAPI provides a built-in headless browser that you can enable by setting render=true, it is only intended to make it easy to access data that is dynamically injected into the page with JavaScript after the initial HTML response.

If you want to be able to interact with the page (click on a button, scroll, etc.) then you will need to use your own Selenium, Puppeteer, or Nightmare headless browser. When doing so you should always configure your scraper to send its requests to our proxy port, not the API endpoint; otherwise, your headless browser might not work correctly.
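For a Chromium-based headless browser, pointing traffic at a proxy usually comes down to one command-line switch. The sketch below only builds that switch; the host and port are placeholders (not real values), so substitute the proxy address from the ScraperAPI documentation. Credentials are not covered here and typically have to be supplied separately.

```python
PROXY_HOST = "proxy.example.com"  # placeholder: use the proxy host from the docs
PROXY_PORT = 8001                 # placeholder port


def chrome_proxy_argument(host=PROXY_HOST, port=PROXY_PORT):
    """Return the Chromium switch that routes browser traffic through a proxy."""
    return f"--proxy-server=http://{host}:{port}"
```

With Selenium, for example, you could pass the result to `options.add_argument(...)` on a `webdriver.ChromeOptions()` instance before starting the browser.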

If you’re building a more traditional scraper, here are 10 web scraping tips you can follow to write a scraper that treats websites kindly and is virtually undetectable.

But if you’re looking for a solution that can handle most web scraping bottlenecks and challenges from just a simple API request, you can check our plans and pick the one that best fits your needs.

Happy scraping!