Whether you’re a developer looking to automate job data collection, a data analyst seeking valuable insights from job trends, or a recruiter striving to find top talent, scraping Google Jobs can significantly enhance your efforts.

In this article, you’ll learn how to:

- Build a powerful Google Jobs scraper using Python and ScraperAPI

- Turn Google Jobs listings into structured JSON and CSV data

- Streamline your job data collection process, reducing costs and development time

Let’s dive in and discover how you can transform your job data access and analysis with this invaluable tool.

ScraperAPI returns Google Jobs search results in JSON or CSV format using only search queries. No complicated setup.

TL;DR: Google Jobs Python Scraper

For those in a hurry, here’s a quick way to scrape Google Jobs for multiple queries using Python and ScraperAPI:

```python
import requests

queries = ['video editor', 'python developer']

for query in queries:
    payload = {
        'api_key': 'YOUR_API_KEY',
        'query': query,
        'country_code': 'us',
        'output_format': 'csv'
    }

    response = requests.get('https://api.scraperapi.com/structured/google/jobs', params=payload)

    if response.status_code == 200:
        filename = f'{query.replace(" ", "_")}_jobs_data.csv'
        with open(filename, 'w', newline='', encoding='utf-8') as file:
            file.write(response.text)
        print(f"Data for {query} saved to {filename}")
    else:
        print(f"Error {response.status_code} for query {query}: {response.text}")
```

This scraper iterates through a list of job queries, sends each one to ScraperAPI’s Google Jobs endpoint, and saves the results as CSV files.

Want to learn how it was built? Keep reading!

The Challenges of Scraping Google Jobs

Google Jobs (or Google for Jobs) is one of the world’s most significant sources of job listings. It gathers job listings from millions of websites in one place, allowing job seekers to find the right opportunity in Google search results.

Because these results are publicly available, scraping Google Jobs listings is generally considered legal, as long as we follow ethical practices and avoid overwhelming the service.

However, there are a couple of challenges that make this task harder:

- Google anti-scraping mechanisms: As part of Google’s services, Google Jobs has advanced bot detection systems that can quickly tell when a request isn’t coming from a human. Countermeasures include CAPTCHA challenges and rate limiting, to mention a few.

- JavaScript rendering: If you inspect the website, you’ll notice that the data you see on your screen is not actually contained in the site’s HTML. Instead, it is dynamically injected via JavaScript. This adds another layer of complexity, forcing us to find a way to render the page before we can access any data.

- Infinite scrolling: Related to the previous challenge, new Google Jobs listings are only loaded when you scroll to the bottom of the page. In other words, we also need to interact with the page if we want to collect a significant amount of data.

- Geo-specific data: Although it is not a challenge per se, Google Jobs shows different results based on your IP location. If you want, for example, to scrape data from the US but are based in Italy like me, you’ll need to find a way to change your IP location (geotargeting).

To overcome these challenges – and any other blocker in our way – I’ll use ScraperAPI to:

- Access a pool of 40M+ proxies across 50+ countries

- Automatically rotate my proxies to ensure a high success rate

- Turn raw Google Jobs HTML data into structured JSON data

Scraping Google Jobs with Python and ScraperAPI

ScraperAPI offers a simple-to-use Google Jobs endpoint to collect job listings for any query we need without building any complex infrastructure or using a headless browser.

By sending our requests through the endpoint, we let ScraperAPI handle JS rendering, CAPTCHAs, and proxy rotation and return the job data in JSON format, saving us hours of development, parsing, and data cleaning.

For this example project, let’s collect data for the query “video editor”.

Google Jobs Layout Overview

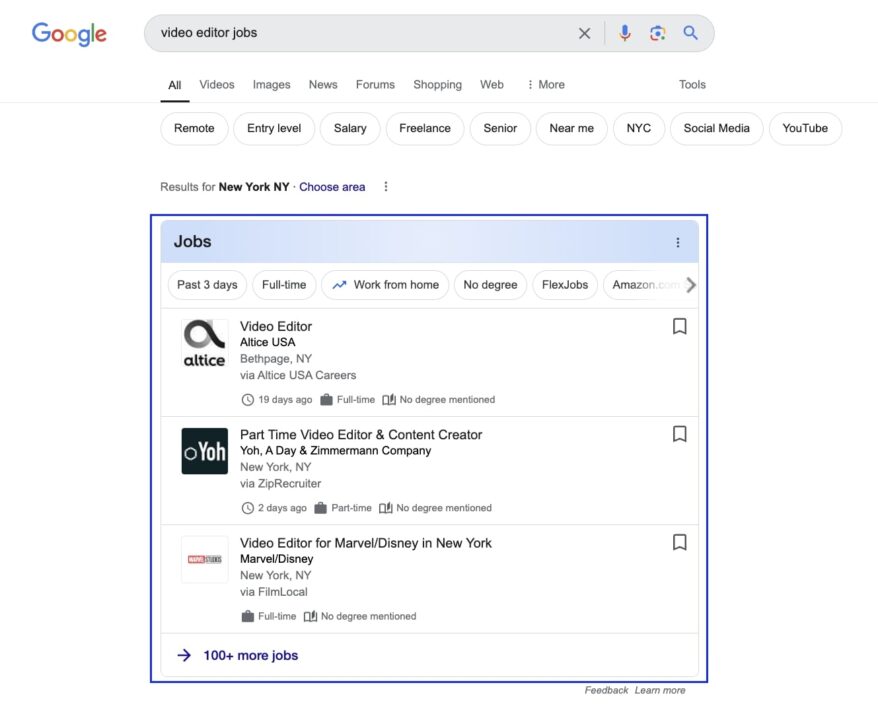

If you navigate to Google and search for video editor jobs, you’ll find yourself on a page similar to this one:

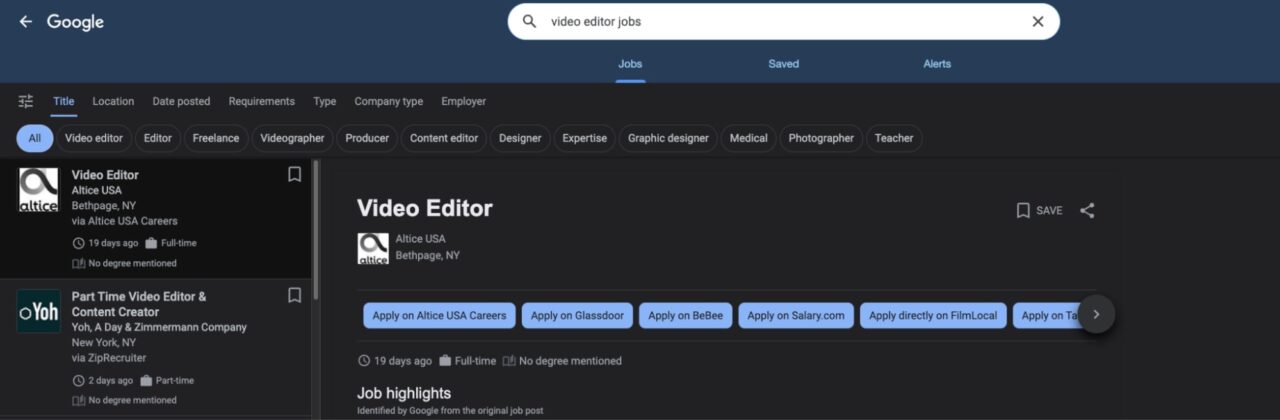

Clicking on the box will take you to the main Google Jobs interface, with job listings on the left and more details about the selected job on the right.

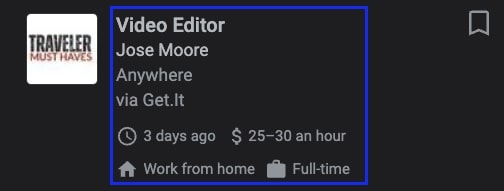

From here, we can access some basic information on the left card, like the job title, the company offering the position, the job location, and extra details, such as how long ago the job was posted and the salary.

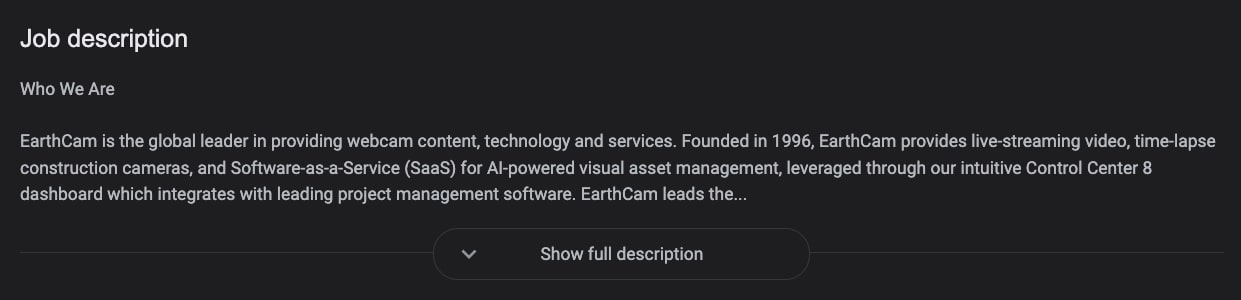

Of course, we can get even more data from the job listing itself, although the most relevant element is the job description on the right, which gives us all the context we need about the job opportunity.

Also, you have to remember that all of this information is dynamically injected into the page through JavaScript, so a regular script won’t be able to see the page as we do, and even after rendering, we still need to perform certain actions to get the full picture.

A good example is the job description above. To be able to collect the entire job description, we would need to click on the Show full description button.

However, by using ScraperAPI’s Google Jobs endpoint, we’ll be able to get the:

- Job listing URL

- Company name

- Job title

- Description

You also get additional details, all without performing any page interactions, so there’s no need for a headless browser or complex workarounds.

Most importantly, you won’t need to maintain your parsers, as the ScraperAPI dev team will ensure your scrapers keep running by monitoring Google Jobs site changes and quickly adapting to new layouts and challenges.

Project requirements

Before we start writing our script, ensure you have Python and the Requests library installed on your machine.

To install Requests, use the following command:

```bash
pip install requests
```

You’ll also need to create a free ScraperAPI account to get access to your API key – which you can access from your dashboard.

That’s it, we’re now ready to get started!

Step 1: Setting up your Google Jobs scraping project

First, create a new directory for your project and a new Python file inside. I’ll call it google-jobs-scraper.py.

At the top of the file, import requests and json. We’ll use the latter to export our data.

```python
import requests
import json
```

The Google Jobs endpoint works by passing your API key, the query you want data for, and the country you’d like your requests to come from. We’ll pass all of this information in a payload.

```python
payload = {
    'api_key': 'YOUR_API_KEY',
    'query': 'video editor',
    'country_code': 'us'
}
```

Note: Add your real API key to the api_key parameter before running your script.

I also set our proxies to the US to get US-based job listings. Otherwise, ScraperAPI could use proxies from different countries, messing with the accuracy of our data. If location isn’t important to you, ignore this suggestion.

To control geolocation even more, you can also target a specific Google TLD using the tld parameter like so:

```python
payload = {
    'api_key': 'YOUR_API_KEY',
    'query': 'video editor',
    'country_code': 'us',
    'tld': '.com'
}
```

This is especially useful if you want to see how the search results change with different combinations, like using UK proxies but targeting Google’s .com TLD.

If not set, ScraperAPI will target the .com TLD by default.
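If you plan to experiment with different combinations, a small helper can keep your payloads consistent. The sketch below uses a hypothetical `build_payload` function (it is not part of ScraperAPI or Requests); it only adds `tld` and `output_format` when you pass them, so the endpoint’s defaults apply otherwise.

```python
def build_payload(api_key, query, country_code='us', tld=None, output_format=None):
    """Build a Google Jobs endpoint payload (hypothetical helper)."""
    payload = {
        'api_key': api_key,
        'query': query,
        'country_code': country_code,
    }
    # Optional parameters: leave them out entirely so ScraperAPI's defaults
    # (the .com TLD, JSON output) apply
    if tld is not None:
        payload['tld'] = tld
    if output_format is not None:
        payload['output_format'] = output_format
    return payload


# Same payload as the example above
print(build_payload('YOUR_API_KEY', 'video editor', tld='.com'))
```

Keeping the optional keys out of the dictionary, rather than setting them to `None`, means the request only carries parameters you chose explicitly.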

Step 2: Send a get() request to the Google Jobs endpoint

Now that the payload is ready, send a get() request to the endpoint, passing the payload as params.

```python
response = requests.get('https://api.scraperapi.com/structured/google/jobs', params=payload)
```

ScraperAPI will use your query to perform the search on Google Jobs, render the page, and return all relevant data points in JSON format.
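To see what the endpoint actually receives, it helps to know that Requests form-encodes the `params` dictionary into the URL’s query string. The standard library’s `urlencode` produces the same encoding, so this sketch rebuilds the request URL without sending anything:

```python
from urllib.parse import urlencode

ENDPOINT = 'https://api.scraperapi.com/structured/google/jobs'

payload = {
    'api_key': 'YOUR_API_KEY',
    'query': 'video editor',
    'country_code': 'us',
}

# Spaces become '+', and keys keep their insertion order
full_url = f'{ENDPOINT}?{urlencode(payload)}'
print(full_url)
# → https://api.scraperapi.com/structured/google/jobs?api_key=YOUR_API_KEY&query=video+editor&country_code=us
```

After a real call, `response.url` typically holds this same URL with your actual key in it, so avoid logging it.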

Step 3: Export All Job Listings Into a JSON File

Because the endpoint returns a JSON response, we can store the data in an all_jobs variable and then export it into a file using the dump() method from json.

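```python
all_jobs = response.json()

# Give the file a .json extension and pretty-print it for readability
with open('google-jobs.json', 'w', encoding='utf-8') as f:
    json.dump(all_jobs, f, indent=4)
```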

Here’s what you’ll get:

```json
{
    "url": "https://www.google.com/search?ibp=htl;jobs&q=video+editor&gl=US&hl=en&uule=w+CAIQICIgSHViZXIgSGVpZ2h0cyxPaGlvLFVuaXRlZCBTdGF0ZXM=",
    "scraper_name": "google-jobs",
    "jobs_results": [
        {
            "title": "Freelance Video Editor for Long Form Video",
            "company_name": "Upwork",
            "location": "Anywhere",
            "link": "https://www.google.com/search?ibp=htl;jobs&q=video+editor[TRUNCATED]",
            "via": "Upwork",
            "description": "We are looking for a talented freelance video editor to work on a long form style video. [TRUNCATED]",
            "extensions": [
                "Posted 16 hours ago",
                "Work from home",
                "Employment Type Contractor and Temp work",
                "Qualification No degree mentioned"
            ]
        }, // TRUNCATED
```

Note: See what a full Google Jobs response looks like.
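Once you have the JSON, you’ll often want a lighter view of each listing. This minimal sketch assumes the response has a `jobs_results` list whose items use the keys shown in the sample response above; `summarize_jobs` is a hypothetical helper, and it uses `.get()` so a missing key doesn’t crash the loop.

```python
def summarize_jobs(data):
    """Flatten the endpoint's JSON into one small dict per job listing."""
    rows = []
    for job in data.get('jobs_results', []):
        extensions = job.get('extensions', [])
        # The posting age is one of the free-text extensions, e.g. "Posted 16 hours ago"
        posted = next((e for e in extensions if e.startswith('Posted')), None)
        rows.append({
            'title': job.get('title'),
            'company': job.get('company_name'),
            'location': job.get('location'),
            'via': job.get('via'),
            'posted': posted,
        })
    return rows


# Trimmed copy of the sample response above
sample = {
    'scraper_name': 'google-jobs',
    'jobs_results': [
        {
            'title': 'Freelance Video Editor for Long Form Video',
            'company_name': 'Upwork',
            'location': 'Anywhere',
            'via': 'Upwork',
            'extensions': ['Posted 16 hours ago', 'Work from home'],
        }
    ],
}

for row in summarize_jobs(sample):
    print(row)
```

With a live request, call `summarize_jobs(response.json())` instead of passing the sample.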

Step 3.2: Export Google Job Listings as CSV

The Google Jobs endpoint also takes care of transforming the scraped data into tabular data, making it super simple to export job listings into a CSV file.

First, let’s set the output_format parameter to csv in our payload:

```python
payload = {
    'api_key': 'YOUR_API_KEY',
    'query': 'video editor',
    'country_code': 'us',
    'tld': '.com',
    'output_format': 'csv'
}
```

Note: If the output_format is not set, the endpoint defaults to JSON format.

Then, we can create the CSV file using the text from the response:

```python
response = requests.get('https://api.scraperapi.com/structured/google/jobs', params=payload)

if response.status_code == 200:
    filename = 'jobs_data.csv'
    # Save the response content to a CSV file
    with open(filename, 'w', newline='', encoding='utf-8') as file:
        file.write(response.text)
    print(f"Data saved to {filename}")
else:
    print(f"Error {response.status_code}: {response.text}")
```

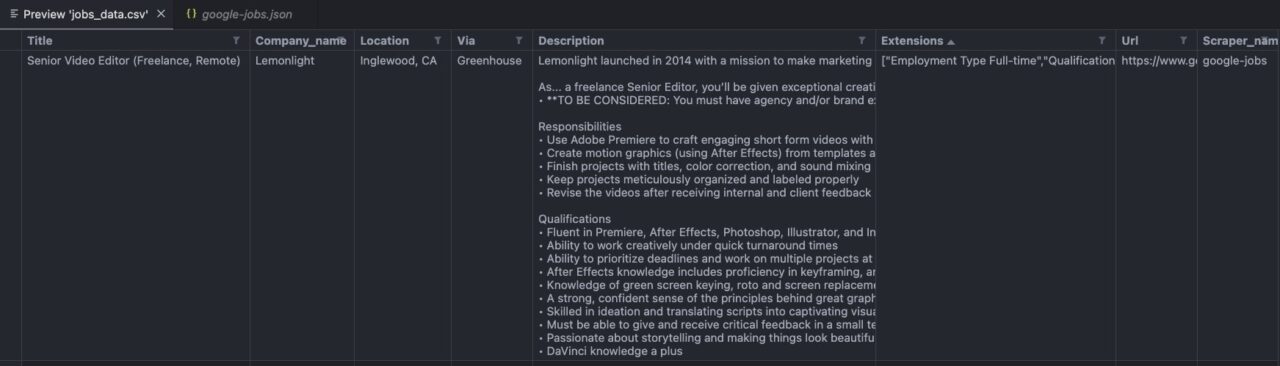

Here’s how your file will look like:

Note: The image above is from my VScode editor preview.
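Before analyzing the file, a quick sanity check can confirm that it contains the header and the number of listings you expect. This sketch uses Python’s `csv` module, which counts records rather than lines; that matters because job descriptions can contain line breaks. `inspect_csv` is a hypothetical helper, and the demo’s column names are made up. The real ones come from ScraperAPI’s CSV output.

```python
import csv
import os
import tempfile


def inspect_csv(path):
    """Return the header row and the number of data records in a CSV file."""
    with open(path, newline='', encoding='utf-8') as file:
        reader = csv.reader(file)
        header = next(reader, [])  # [] if the file is empty
        # csv.reader yields one item per record, even when a quoted field
        # (such as a job description) spans several lines
        record_count = sum(1 for _ in reader)
    return header, record_count


# Demo with a throwaway file and made-up columns; in your project, call
# inspect_csv('jobs_data.csv') on the file saved above instead.
with tempfile.NamedTemporaryFile('w', suffix='.csv', delete=False,
                                 newline='', encoding='utf-8') as tmp:
    tmp.write('title,description\n"Video Editor","Line one\nLine two"\n')
    demo_path = tmp.name

header, record_count = inspect_csv(demo_path)
os.remove(demo_path)
print(header, record_count)  # → ['title', 'description'] 1
```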

ScraperAPI lets you bypass all major bot blockers, giving you access to data from Google Jobs, LinkedIn, Glassdoor, and more.

Scraping Google Jobs Listings from Multiple Queries

In most projects, you’ll want to monitor more than one query at a time. There are several ways to accomplish this, but for the sake of simplicity, let’s use a for loop and tweak our code a little.

First, create a new variable for your queries. In my case, I’ll use video editor and python developer.

```python
queries = ['video editor', 'python developer']
```

Next, move all your code inside a for loop to iterate through each query, passing each one to the query parameter inside the payload.

```python
for query in queries:
    payload = {
        'api_key': 'YOUR_API_KEY',
        'query': query,
        'country_code': 'us',
        'tld': '.com',
        'output_format': 'csv'
    }

    response = requests.get('https://api.scraperapi.com/structured/google/jobs', params=payload)

    if response.status_code == 200:
        filename = f'{query.replace(" ", "_")}_jobs_data.csv'
        with open(filename, 'w', newline='', encoding='utf-8') as file:
            file.write(response.text)
        print(f"Data for {query} saved to {filename}")
    else:
        print(f"Error {response.status_code} for query {query}: {response.text}")
```

By using a loop like this, you can scrape job listings for multiple queries in a single run, saving each set of results in a separate CSV file. This lets you track several roles or job markets from the same script and build a broader dataset to work with.
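One caveat: `query.replace(" ", "_")` only handles spaces. A query such as “c/c++ developer” would put a `/` in the filename, which `open()` treats as a directory separator, so the write fails. A sketch of a stricter, hypothetical `query_to_filename` helper that you could use in place of that line:

```python
import re


def query_to_filename(query, suffix='_jobs_data.csv'):
    """Turn a search query into a filename-safe string (hypothetical helper)."""
    # Lowercase, then collapse every run of characters other than ASCII
    # letters and digits into a single underscore
    slug = re.sub(r'[^a-z0-9]+', '_', query.lower()).strip('_')
    # Fall back to a generic name if nothing usable is left
    return f'{slug or "query"}{suffix}'


for q in ['video editor', 'python developer', 'C/C++ Developer']:
    print(query_to_filename(q))
```

Because non-ASCII characters are dropped and symbols collapse, two different queries can map to the same name (for example, “C++ developer” and “C developer”). If your query list contains near-duplicates like these, add a counter or hash to the name.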

Automate Google Jobs Scraping Projects

To further streamline your job scraping process, you can automate your Google Jobs scraping tasks using ScraperAPI’s DataPipeline endpoint. This allows you to set up scraping projects programmatically and manage them with ease.

Below is an example demonstrating how to create a Google Jobs project using the DataPipeline endpoint.

First, you’ll need to import the necessary libraries and set up your API request:

```python
import requests
import json

url = 'https://datapipeline.scraperapi.com/api/projects?api_key=YOUR_API_KEY'
headers = {'content-type': 'application/json'}
```

For example, set the scraping interval to daily and the scheduled time to now, so the first scrape runs as soon as the project is created and then repeats every day.

```python
data = {
    "name": "Google jobs project",
    "projectInput": {
        "type": "list",
        "list": ["NodeJS", "Python Developer", "Data Scientist"]
    },
    "projectType": "google_jobs",
    "schedulingEnabled": True,
    "scheduledAt": "now",
    "scrapingInterval": "daily",  # continued below...
```

Enhance your setup by configuring notification settings to receive updates on the project’s status. Choose to be notified of the success or failure of each scraping task.

```python
    "notificationConfig": {
        "notifyOnSuccess": "with_every_run",
        "notifyOnFailure": "with_every_run"
    },
```

Also, include a webhookOutput object, which sends the scraped data directly to a URL you specify so you can feed it into your own projects for real-time processing.

```python
    "webhookOutput": {
        "url": "https://your-webhook-url.com",
        "webhookEncoding": "application/json"
    }
}  # closing "data"
```
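For reference, here are the fragments above assembled into a single dictionary. No new fields are added, and the webhook URL is still a placeholder. Serializing it with `json.dumps` converts Python’s `True` into JSON’s `true`, which is why the request body is built this way.

```python
import json

data = {
    "name": "Google jobs project",
    "projectInput": {
        "type": "list",
        "list": ["NodeJS", "Python Developer", "Data Scientist"],
    },
    "projectType": "google_jobs",
    "schedulingEnabled": True,
    "scheduledAt": "now",
    "scrapingInterval": "daily",
    "notificationConfig": {
        "notifyOnSuccess": "with_every_run",
        "notifyOnFailure": "with_every_run",
    },
    "webhookOutput": {
        "url": "https://your-webhook-url.com",  # placeholder: use your own endpoint
        "webhookEncoding": "application/json",
    },
}

# Serialize to the JSON text that will be sent as the request body
body = json.dumps(data, indent=2)
print(body)
```

As an alternative, Requests can do the serialization for you with `requests.post(url, json=data)`, which also sets the JSON content type header.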

Finally, send the request to create the automated scraping project. This API call sets everything into motion, ensuring your specified job queries are scraped at the defined intervals.

```python
response = requests.post(url, headers=headers, data=json.dumps(data))
print(response.text)
```

Pro Tip:

Using webhooks enables seamless integration of the scraped data into your workflow, such as triggering data analysis or updates. For more information on setting up your own webhook, check out this article on automating web scraping.

Here’s an example of the response:

```json
{
    "id": 96424,
    "name": "Google jobs project",
    "schedulingEnabled": true,
    "scrapingInterval": "daily",
    "createdAt": "2024-07-30T19:13:57.732Z",
    "scheduledAt": "2024-07-30T19:13:57.732Z",
    "projectType": "google_jobs",
    "projectInput": {
        "type": "list",
        "list": ["NodeJS", "Python Developer", "Data Scientist"]
    },
    "notificationConfig": {
        "notifyOnSuccess": "with_every_run",
        "notifyOnFailure": "with_every_run"
    }
}
```

You can access and manage your project results through the ScraperAPI dashboard. For this example, you would visit https://dashboard.scraperapi.com/projects/{project-id} to download the scraped data.
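Because the response includes the project’s `id`, you can build that dashboard link programmatically. The following minimal sketch uses a trimmed copy of the example response above. With a live request, you would call `response.json()` instead of `json.loads`. `dashboard_url` is a hypothetical helper.

```python
import json


def dashboard_url(project):
    """Build the dashboard link for a DataPipeline project (hypothetical helper)."""
    # URL pattern taken from the dashboard address shown above
    return f"https://dashboard.scraperapi.com/projects/{project['id']}"


# Trimmed copy of the example response text
response_text = '{"id":96424,"name":"Google jobs project","projectType":"google_jobs","scrapingInterval":"daily"}'

project = json.loads(response_text)
print(dashboard_url(project))  # → https://dashboard.scraperapi.com/projects/96424
```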

This setup not only automates data collection but also provides convenient access to up-to-date job listings, helping you stay informed about market trends and opportunities.


Discover how DataPipeline can help you scale your data collection processes while reducing costs and development time.

Wrapping Up: Before Scraping Google Jobs

In this article, we’ve explored how to scrape Google Jobs listings efficiently by leveraging ScraperAPI’s capabilities to automate job data collection and overcome challenges like CAPTCHA, JavaScript rendering, and bot blockers.

Whether you’re a developer, data analyst, or recruiter, this setup provides a powerful solution for accessing up-to-date job market data.

That said, here are a couple of considerations for your project:

- Set your scraping jobs to run at least once a week, as job listings change quickly, and your dataset can become outdated

- With DataPipeline, you can monitor up to 10,000 search queries per project, so make sure to fill it up before creating a new project

- You can send a get() request to https://datapipeline.scraperapi.com/api/projects to get a list of all your existing projects
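Here is a minimal sketch of that call. It assumes the endpoint accepts your key as an `api_key` query parameter (the same way the project-creation URL above does) and that it responds with JSON. It uses only the standard library. With Requests, the equivalent is `requests.get(PROJECTS_ENDPOINT, params={'api_key': api_key})`. `projects_url` and `list_projects` are hypothetical helper names.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PROJECTS_ENDPOINT = 'https://datapipeline.scraperapi.com/api/projects'


def projects_url(api_key):
    # Assumption: authenticate with an api_key query parameter,
    # as in the project-creation request
    return f'{PROJECTS_ENDPOINT}?{urlencode({"api_key": api_key})}'


def list_projects(api_key, timeout=60):
    """Fetch your DataPipeline projects (makes a network request).

    Assumes the response body is JSON; urlopen raises HTTPError
    for 4xx/5xx status codes.
    """
    with urlopen(projects_url(api_key), timeout=timeout) as resp:
        return json.loads(resp.read().decode('utf-8'))


# Usage (requires a valid key and network access):
# projects = list_projects('YOUR_API_KEY')
```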

Learn more about DataPipeline endpoints in our documentation.

Until next time, happy scraping!