In real estate, having the latest property information is crucial. Whether you’re looking for a home to buy or rent or working in the real estate industry, scraping Idealista.com can provide you with relevant and sufficient data on properties to make better investment decisions.

However, Idealista uses many anti-bot mechanisms to prevent you from getting the data programmatically, making it more challenging to collect this data at scale.


In this article, we’ll show you how to build an Idealista scraper to extract property listing details like prices, locations, and more, using Python and ScraperAPI to avoid getting blocked.

Whether you’re a tech newbie or a coding pro, we aim to make this guide easy to follow. You’ll soon be tapping into Idealista.com’s data effortlessly.

Ready to dive in? Let’s go!

TL;DR: Full Idealista.com Scraper

For those in a hurry, here is the complete code:

import json
from datetime import datetime

import requests
from bs4 import BeautifulSoup

scraper_api_key = 'api_key'
idealista_query = "https://www.idealista.com/en/venta-viviendas/barcelona-barcelona/"
scraper_api_url = f'http://api.scraperapi.com/?api_key={scraper_api_key}&url={idealista_query}'

response = requests.get(scraper_api_url)

# Check if the request was successful (status code 200)
if response.status_code == 200:
    # Parse the HTML content using BeautifulSoup
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract each house listing post
    house_listings = soup.find_all('article', class_='item')

    # Create a list to store extracted information
    extracted_data = []

    # Loop through each house listing and extract information
    for index, listing in enumerate(house_listings):
        # Extracting relevant information
        title = listing.find('a', class_='item-link').get('title')
        price = listing.find('span', class_='item-price').text.strip()

        # Find all span elements with class 'item-detail'
        item_details = listing.find_all('span', class_='item-detail')

        # Extracting bedrooms and area from the item_details
        bedrooms = item_details[0].text.strip() if item_details else "N/A"
        area = item_details[1].text.strip() if len(item_details) > 1 else "N/A"

        # Description and tags are optional, so fall back to "N/A" when missing
        description_element = listing.find('div', class_='item-description')
        description = description_element.text.strip() if description_element else "N/A"
        tags_element = listing.find('span', class_='listing-tags')
        tags = tags_element.text.strip() if tags_element else "N/A"

        # Extracting images (each <img> tag is stored as an HTML string)
        image_elements = listing.find_all('img')
        image_tags = [img.prettify() for img in image_elements]

        # Store extracted information in a dictionary
        listing_data = {
            "Title": title,
            "Price": price,
            "Bedrooms": bedrooms,
            "Area": area,
            "Description": description,
            "Tags": tags,
            "Image Tags": image_tags
        }

        # Append the dictionary to the list
        extracted_data.append(listing_data)

        # Print the extracted information (you can modify this part as needed)
        print(f"Listing {index + 1}:")
        print(f"Title: {title}")
        print(f"Price: {price}")
        print(f"Bedrooms: {bedrooms}")
        print(f"Area: {area}")
        print(f"Description: {description}")
        print(f"Tags: {tags}")
        print(f"Image Tags: {', '.join(image_tags)}")
        print("=" * 50)

    # Save the extracted data to a JSON file
    current_datetime = datetime.now().strftime("%Y%m%d%H%M%S")
    json_filename = f"extracted_data_{current_datetime}.json"
    with open(json_filename, "w", encoding="utf-8") as json_file:
        json.dump(extracted_data, json_file, ensure_ascii=False, indent=2)
    print(f"Extracted data saved to {json_filename}")
else:
    print(f"Error: Unable to retrieve HTML content. Status code: {response.status_code}")

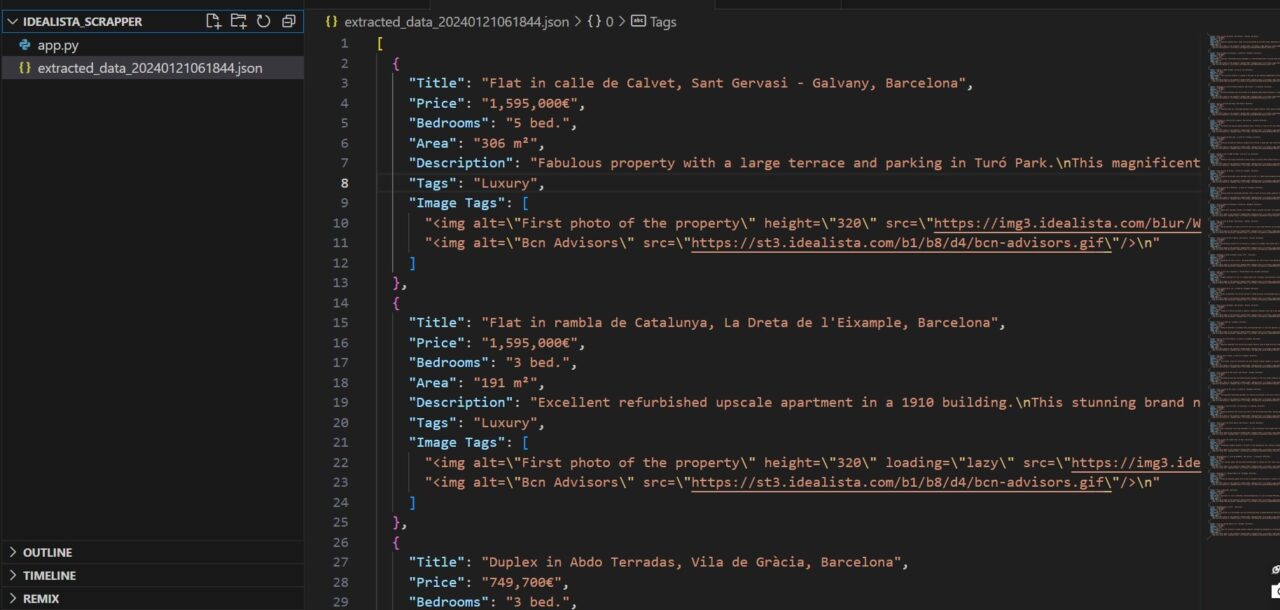

After running the code, you should have the data dumped in the extracted_data(datetime).json file.

The image below shows how your extracted_data(datetime).json file should look like.

Note: Before running the code, add your API key to the scraper_api_key variable. If you don’t have one, create a free ScraperAPI account to get 5,000 API credits and access to your unique key.

Scraping Idealista Real Estate Listings

For this article, we’ll focus on Barcelona real estate listings on the Idealista website (https://www.idealista.com/en/venta-viviendas/barcelona-barcelona/) and extract each listing’s title, price, bedrooms, area, description, tags, and images.

Project Requirements

You will need Python 3.8 or newer, so make sure you have a supported version before continuing. You’ll also need to install Requests and BeautifulSoup4.

- The Requests library downloads Idealista’s search results page.

- BS4 makes it simple to scrape information from the downloaded HTML.

Install both of these libraries using pip:

pip install requests beautifulsoup4

Now, create a new directory and a Python file to store all of the code for this tutorial. On Windows:

mkdir Idealista_Scraper

cd Idealista_Scraper

echo. > app.py

On macOS or Linux, replace the last command with touch app.py.

You are now ready to go on to the next steps!

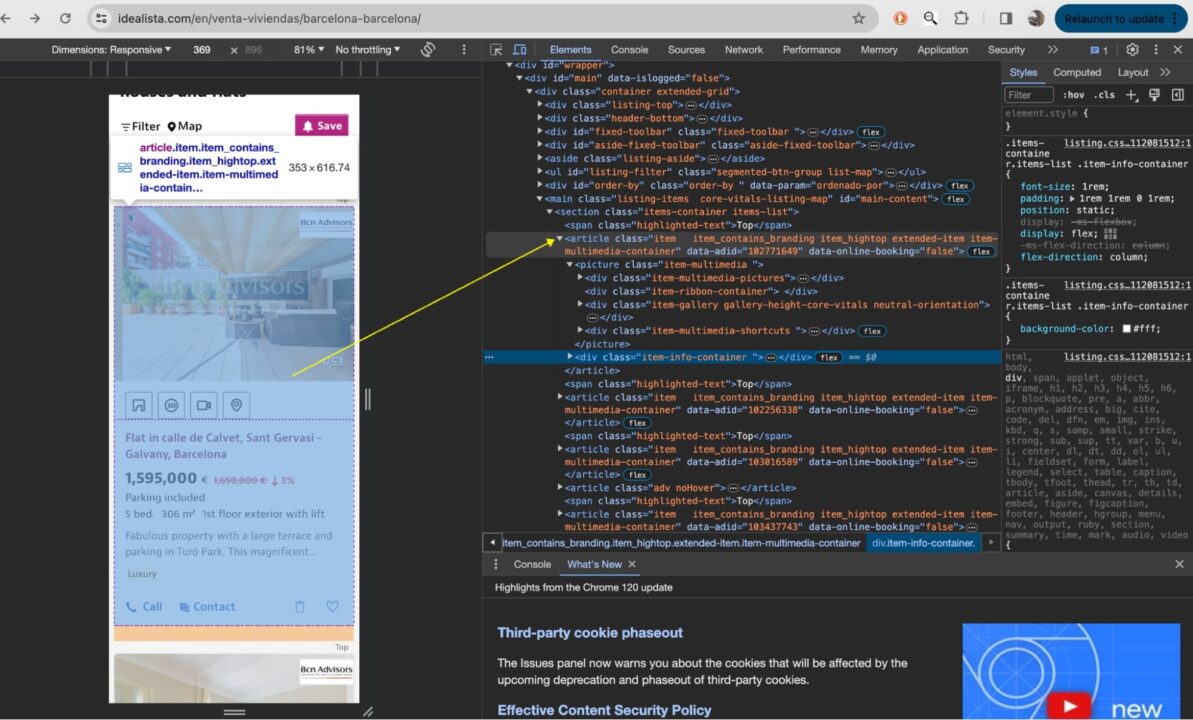

Understanding Idealista’s Website Layout

To understand the Idealista website, inspect it to see all the HTML elements and CSS properties.

The HTML structure shows that each listing is wrapped in an <article> element. This is what we’ll target to get the listings from Idealista.

The <article> element contains all the property features we need. You can expand the entire structure fully to see them.
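To make that structure concrete, here is a minimal sketch that parses a small, hand-written HTML fragment and lists the elements nested inside one listing. The markup is a simplified assumption modeled on the class names used later in this tutorial (item, item-link, item-price, item-detail). The live page contains many more elements and may change at any time. The describe_listing helper is a hypothetical name introduced for illustration.

```python
from bs4 import BeautifulSoup

# Simplified, hand-written stand-in for one Idealista listing.
# This is an assumption for illustration, not a copy of the live markup.
SAMPLE_HTML = """
<section>
  <article class="item">
    <a class="item-link" title="Flat in Eixample, Barcelona" href="#">Flat in Eixample</a>
    <span class="item-price">450,000€</span>
    <span class="item-detail">3 bed.</span>
    <span class="item-detail">95 m²</span>
  </article>
</section>
"""


def describe_listing(article):
    """Return (tag name, class list) for every element nested in a listing."""
    return [(el.name, el.get("class", [])) for el in article.find_all(True)]


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
first_listing = soup.find("article", class_="item")

# Print each nested element, much like expanding the node in your browser.
for name, classes in describe_listing(first_listing):
    print(name, classes)
```

Running the same helper on a listing from the real page is a quick way to check that the class names this tutorial relies on are still present.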

Using ScraperAPI

Idealista is known for blocking scrapers from its website, making collecting data at any meaningful scale challenging. For that reason, we’ll be sending our get() requests through ScraperAPI, effectively bypassing Idealista’s anti-scraping mechanisms without complicated workarounds.

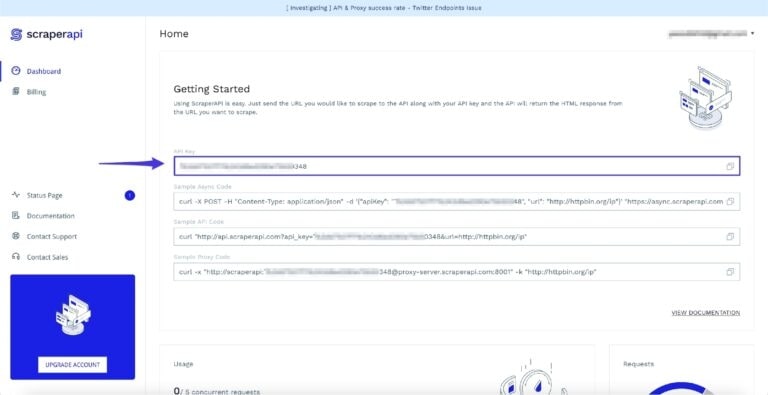

To get started, create a free ScraperAPI account to access your API key.

You’ll receive 5,000 free API credits for a seven-day trial, starting whenever you’re ready.

Now that you have your ScraperAPI account, let’s get down to business!

Step 1: Importing Your Libraries

For any of this to work, you must import the necessary Python modules: json, datetime, requests, and BeautifulSoup.

import json

from datetime import datetime

import requests

from bs4 import BeautifulSoup

Next, create a variable to store your API key:

scraper_api_key = 'ENTER KEY HERE'
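If you would rather not hard-code the key in app.py, one common alternative is to read it from an environment variable. The sketch below is an optional variation, not part of the original script. The variable name SCRAPERAPI_KEY and the load_api_key helper are hypothetical names chosen for this example.

```python
import os


def load_api_key(env_var="SCRAPERAPI_KEY"):
    """Read the API key from an environment variable.

    The default variable name is a hypothetical choice for this example.
    """
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return api_key
```

With the variable set in your shell, you could then write scraper_api_key = load_api_key() instead of pasting the key into the file.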

Step 2: Downloading Idealista.com’s HTML

ScraperAPI serves as a crucial intermediary, mitigating common challenges encountered during web scraping, such as handling CAPTCHAs, managing IP bans, and ensuring consistent access to target websites.

By routing our requests through ScraperAPI, we offload the intricacies of handling these obstacles to a dedicated service, allowing us to focus on extracting the desired data seamlessly.

Let’s start by adding our initial URL to idealista_query and constructing our get() requests using ScraperAPI’s API endpoint.

idealista_query = "https://www.idealista.com/en/venta-viviendas/barcelona-barcelona/"

scraper_api_url = f'http://api.scraperapi.com/?api_key={scraper_api_key}&url={idealista_query}'

r = requests.get(scraper_api_url)
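The f-string above works for this URL, but a target URL that carries its own query string (for example, a filtered or sorted search) would need to be percent-encoded so its parameters are not mistaken for ScraperAPI parameters. The sketch below shows one way to do that with the standard library. The build_scraperapi_url helper is a hypothetical name introduced for illustration.

```python
from urllib.parse import urlencode

SCRAPERAPI_ENDPOINT = "http://api.scraperapi.com/"


def build_scraperapi_url(api_key, target_url):
    """Return the ScraperAPI request URL with the target URL percent-encoded."""
    query = urlencode({"api_key": api_key, "url": target_url})
    return f"{SCRAPERAPI_ENDPOINT}?{query}"


print(build_scraperapi_url(
    "api_key",
    "https://www.idealista.com/en/venta-viviendas/barcelona-barcelona/",
))
```

Passing the same dictionary to requests.get() through its params argument applies the same encoding, so either approach should produce an equivalent request.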


Step 3: Parsing Idealista.com

Next, let’s parse Idealista’s HTML (using BeautifulSoup), creating a soup object we can now use to select specific elements from the page:

soup = BeautifulSoup(r.content, 'html.parser')

house_listings = soup.find_all('article', class_='item')

Specifically, we’re searching for all <article> elements with a CSS class of item using the find_all() method. The resulting list of articles is assigned to the variable house_listings, which contains each post.

Resource: CSS Selectors Cheat Sheet.
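If you prefer CSS selectors, BeautifulSoup’s select() method can express the same query. The sketch below compares the two approaches on hand-written sample markup. The HTML, including the extended-item and adv class names, is an assumption made for illustration and is not copied from Idealista.

```python
from bs4 import BeautifulSoup

# Hand-written sample markup (an assumption for illustration only).
SAMPLE_HTML = """
<article class="item extended-item"><a class="item-link" title="Listing A"></a></article>
<article class="adv"><a title="Sponsored"></a></article>
<article class="item"><a class="item-link" title="Listing B"></a></article>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find_all() with class_ matches any <article> whose class list contains "item".
by_find_all = soup.find_all("article", class_="item")

# select() takes a CSS selector and returns the same elements here.
by_selector = soup.select("article.item")

# Selectors can also reach nested elements in one step.
titles = [a["title"] for a in soup.select("article.item a.item-link")]
print(len(by_find_all), len(by_selector), titles)
```

Both calls skip the second article because its class list does not include item.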

Step 4: Scraping Idealista Listing Information

Now that you have scraped all <article> elements containing each listing, it is time to extract each property and its information!

# Create a list to store extracted information
extracted_data = []

# Loop through each house listing and extract information
for index, listing in enumerate(house_listings):
    # Extracting relevant information
    title = listing.find('a', class_='item-link').get('title')
    price = listing.find('span', class_='item-price').text.strip()

    # Find all span elements with class 'item-detail'
    item_details = listing.find_all('span', class_='item-detail')

    # Extracting bedrooms and area from the item_details
    bedrooms = item_details[0].text.strip() if item_details else "N/A"
    area = item_details[1].text.strip() if len(item_details) > 1 else "N/A"

    # Description and tags are optional, so fall back to "N/A" when missing
    description_element = listing.find('div', class_='item-description')
    description = description_element.text.strip() if description_element else "N/A"
    tags_element = listing.find('span', class_='listing-tags')
    tags = tags_element.text.strip() if tags_element else "N/A"

    # Extracting images (each <img> tag is stored as an HTML string)
    image_elements = listing.find_all('img')
    image_tags = [img.prettify() for img in image_elements]

    # Store extracted information in a dictionary
    listing_data = {
        "Title": title,
        "Price": price,
        "Bedrooms": bedrooms,
        "Area": area,
        "Description": description,
        "Tags": tags,
        "Image Tags": image_tags
    }

    # Append the dictionary to the list
    extracted_data.append(listing_data)

    # Print the extracted information (you can modify this part as needed)
    print(f"Listing {index + 1}:")
    print(f"Title: {title}")
    print(f"Price: {price}")
    print(f"Bedrooms: {bedrooms}")
    print(f"Area: {area}")
    print(f"Description: {description}")
    print(f"Tags: {tags}")
    print(f"Image Tags: {', '.join(image_tags)}")
    print("=" * 50)

In the full script, we first check that the HTTP response status code is 200, which indicates a successful request.

If the request succeeds, the script parses the HTML content of the Idealista page and finds every <article> element with the class 'item'. Each of these elements represents one house listing.

The script then iterates through each listing and extracts its details.

The extracted information for each listing is stored in dictionaries, which are appended to a list called extracted_data.

The script prints the extracted information for each listing to the console, displaying details like:

- Title

- Price

- Bedrooms

- Area

- Description

- Tags

- Image tags
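These values are stored as display text. If you plan to analyze them, a small helper can turn strings such as a price or an area into integers. The sketch below is an optional addition, not part of the original script. parse_number is a hypothetical name, and the sample strings are assumed formats rather than values copied from Idealista.

```python
import re


def parse_number(text):
    """Return the decimal digits in text as an int, or None if there are none.

    Assumes whole numbers: separators such as "," and "." are simply dropped.
    """
    digits = re.sub(r"\D", "", text)
    return int(digits) if digits else None


# Assumed example strings, for illustration only.
for sample in ["450,000€", "1.250.000 €", "3 bed.", "N/A"]:
    print(repr(sample), "->", parse_number(sample))
```

Because every non-digit character is removed, this approach would misread values with decimal fractions, so treat it as a starting point rather than a complete parser.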

Step 5: Exporting Idealista Properties Into a JSON File

Now that you have extracted all the data you need, it is time to save them to a JSON file for easy usability.
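The export step from the full script at the top of this article can be sketched as a small function. The logic (timestamped filename, UTF-8 encoding, ensure_ascii=False) comes from that script. Wrapping it in a save_listings function with a directory argument is an assumption made here so the step can be reused and tested on its own.

```python
import json
import os
from datetime import datetime


def save_listings(extracted_data, directory="."):
    """Write the listings to a timestamped JSON file and return its path."""
    current_datetime = datetime.now().strftime("%Y%m%d%H%M%S")
    json_filename = os.path.join(directory, f"extracted_data_{current_datetime}.json")

    # ensure_ascii=False keeps characters such as "€" and "²" readable in the file.
    with open(json_filename, "w", encoding="utf-8") as json_file:
        json.dump(extracted_data, json_file, ensure_ascii=False, indent=2)

    return json_filename
```

Calling save_listings(extracted_data) after the loop in Step 4 writes the file to the current directory and returns its name, which you can print to confirm where the data was saved.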

Congratulations, you have successfully scraped data from Idealista, and you are free to use the data any way you want!

Wrapping Up

This tutorial presented a step-by-step approach to scraping data from Idealista, showing you how to:

- Collect the listings shown on the first page of Barcelona results on Idealista

- Loop through all listings to collect information

- Send your requests through ScraperAPI to avoid getting banned

- Export all extracted data into a structured JSON file

We hope this guide has shown you how Python and ScraperAPI can help you unlock the property data Idealista holds.

Stay curious, and remember, with great scraping capabilities come great responsibilities.

Until next time, happy scraping!

P.S. If you’d like to explore another approach to scraping Idealista and bypassing DataDome, check out this publication by The Web Scraping Club. It was created in collaboration between our own Leonardo Rodriguez and Pierluigi Vinciguerra, Co-Founder and CTO at Databoutique.

Frequently-Asked Questions

You can scrape a wide range of data from Idealista.com. The main data points include:

- Property Prices

- Location Data

- Property Features

- Number of Bedrooms

- Number of Bathrooms

- Property Size (Square Meters)

- Property Type (e.g., apartment, house)

- Listing Descriptions

- Contact Information of Sellers/Agents

- Availability Status

- Images or Photos of the Property

- Floor Plans (if available)

- Additional Amenities or Facilities

- Property ID or Reference Number

- Date of Listing Publication

Idealista data supports many use cases. Here are just a few examples:

- Market Research: Analyze property prices and trends in specific neighborhoods or cities and understand the real estate market’s demand and supply dynamics.

- Investment Decision-Making: Identify potential investment opportunities by studying property valuation and market conditions.

- Customized Alerts for Buyers and Investors: Set up personalized alerts for specific property criteria to ensure timely access to relevant listings.

- Research for Academia: Researchers can use scraped data for academic studies on real estate trends, thereby gaining insights into urban development, housing patterns, and demographic preferences.

- Competitive Analysis for Agents: Real estate agents can analyze competitor listings and pricing strategies and stay ahead by understanding market trends and consumer preferences.

Idealista actively blocks web scrapers using techniques like IP blocking and browser fingerprinting. To keep your scrapers from breaking, we advise using a tool like ScraperAPI to bypass anti-bot mechanisms and automatically handle most of the complexities involved in web scraping.