Understanding Web Scraping in Python

Web scraping is one of the most useful skills to develop in 2024 and beyond. It allows you to collect data at a massive scale from online sources and use it for whatever you need, such as finding market trends or investment opportunities, or building custom applications and dashboards.

In this two-part mini-guide, we’ll explore the fundamentals of web scraping using Python and the Scrapy framework, dedicating this first entry to:

- What web scraping is

- The main tools we’ll use for web scraping

- The common challenges of web scraping


What is Web Scraping?

Web scraping is an automated method used to extract large amounts of public data from websites. It involves utilizing software tools to navigate web pages, retrieve the desired information, and store it for further analysis or manipulation.

Fundamentally, web scraping mimics the actions of a human user browsing a website, but much faster. The process typically involves accessing the HTML code of a webpage, parsing it to locate the relevant data, and then extracting and organizing that data into a structured format such as a spreadsheet or database.
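As a rough illustration of those steps, the sketch below parses a small HTML string, extracts product names and prices, and writes them out as CSV using only the standard library. The markup, the `name`/`price` class names, and the `ProductParser`/`html_to_csv` helpers are hypothetical; a real page would first be downloaded over HTTP.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page content; in practice this string would come from an HTTP response
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">19.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects one dict per <li>, reading <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._current = {}
        self._field = None  # set while we are inside a span we care about

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(self._current)
            self._current = {}


def html_to_csv(html):
    """Parse the HTML, extract product rows, and return them as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(parser.rows)
    return buffer.getvalue()


print(html_to_csv(SAMPLE_HTML))
```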

Web scraping has become invaluable in various fields, including data science, market research, and business intelligence. By automating the process of data collection from online sources, organizations can gather vast amounts of information quickly and efficiently, enabling them to make informed decisions and gain competitive insights.

However, the legality and ethics of web scraping depend on factors such as the website’s terms of service and the nature of the data being collected. Practitioners of web scraping must therefore exercise caution and follow best practices to stay within legal and ethical standards.
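One widely followed best practice is to honor a site's robots.txt rules. Python's standard library ships `urllib.robotparser` for this. The rules and the user-agent name below are made up for illustration, and robots.txt compliance alone does not settle the legal questions mentioned above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download it from
# https://<site>/robots.txt (RobotFileParser.set_url() plus read() can do that)
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robots_parser(robots_txt):
    """Parse robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


robots = build_robots_parser(ROBOTS_TXT)
user_agent = "my-scraper"  # hypothetical user-agent name
print(robots.can_fetch(user_agent, "https://example.com/products/1"))   # True
print(robots.can_fetch(user_agent, "https://example.com/private/data"))  # False
print(robots.crawl_delay(user_agent))  # 2
```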

Data Collection with REST APIs

As software developers, we are used to dealing with structured or at least semi-structured data.

If data needs to be exchanged between applications, we use serialization formats such as XML or JSON. This is particularly useful for exchanging data over HTTP.
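For comparison with HTML scraping later on, here is how the same record could be serialized to JSON and to XML with the standard library. The `book` record is a hypothetical example.

```python
import json
import xml.etree.ElementTree as ET

# A hypothetical record that two applications want to exchange
book = {"title": "Example Book", "price": "19.99"}

# JSON: a dict maps directly onto a JSON object
as_json = json.dumps(book)

# XML: build an element tree, one child element per field, then serialize it
root = ET.Element("book")
for key, value in book.items():
    ET.SubElement(root, key).text = value
as_xml = ET.tostring(root, encoding="unicode")

print(as_json)  # {"title": "Example Book", "price": "19.99"}
print(as_xml)   # <book><title>Example Book</title><price>19.99</price></book>

# The receiving side can parse either format back into the same data
print(json.loads(as_json) == book)  # True
print({child.tag: child.text for child in ET.fromstring(as_xml)} == book)  # True
```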

A whole ecosystem of tooling has grown around HTTP-based APIs (Application Programming Interfaces): many companies give their clients access to their systems through REST (Representational State Transfer) APIs.

In Python, we can access REST APIs with built-in modules from the standard library.

```python
import urllib.request
import json


def get_weather_with_urllib(API_KEY, city):
    url = f"http://api.openweathermap.org/data/2.5/weather?q={city}&appid={API_KEY}"
    response = urllib.request.urlopen(url)
    return json.loads(response.read())
```

The code above opens an HTTP connection to api.openweathermap.org and performs a GET request on the API resource /data/2.5/weather using the urllib.request module. The result is a file-like object that can be read and then parsed with the json module.

While this looks pretty straightforward, dealing with more complex requests, status codes, different HTTP verbs, session handling, and similar concerns can quickly result in lengthy code.
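To give a feel for that, here is a minimal sketch of a JSON POST request with urllib, with request construction split from sending. The endpoint URL and the helper names `build_json_post` and `send` are hypothetical. Note how the body encoding, headers, HTTP verb, and error handling all have to be spelled out by hand.

```python
import json
import urllib.error
import urllib.request


def build_json_post(url, payload):
    """Build (but don't send) a POST request carrying a JSON body."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),  # the body must be bytes
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=10):
    """Send the request and return (status_code, parsed_json_or_None)."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, json.loads(response.read())
    except urllib.error.HTTPError as err:
        # urllib raises 4xx/5xx responses as exceptions instead of returning them
        return err.code, None


request = build_json_post("https://example.com/api/items", {"name": "widget"})
print(request.get_method())  # POST
```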

A commonly used third-party library for managing HTTP requests in Python is Requests by Kenneth Reitz, a Python programmer well-known in the community for his various contributions and software packages.

You can install Requests with pip from your command line:

python -m pip install requests

With requests, our small script would look like this:

```python
import requests


def get_weather_with_requests(API_KEY, city):
    url = f"http://api.openweathermap.org/data/2.5/weather?q={city}&appid={API_KEY}"
    response = requests.get(url)
    return response.json()
```

Note that the only difference here is that we use response.json() instead of json.loads(response.read()) to parse the response. Bear with me; we will see more examples where the requests module shines.
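One place where requests shines is query-string handling: passing `params` lets it URL-encode values such as city names containing spaces. The sketch below assumes the same OpenWeatherMap endpoint as above, adds a timeout and `raise_for_status()`, and uses a placeholder API key. The last lines prepare the request without sending it, just to show the URL requests would build.

```python
import requests


def get_weather(api_key, city, timeout=10):
    """Fetch current weather, letting requests build the query string."""
    response = requests.get(
        "http://api.openweathermap.org/data/2.5/weather",
        params={"q": city, "appid": api_key},  # URL-encoded by requests
        timeout=timeout,  # give up instead of hanging on a slow server
    )
    response.raise_for_status()  # raise requests.HTTPError for 4xx/5xx responses
    return response.json()


# Build the request without sending it, just to inspect the final URL
prepared = requests.Request(
    "GET",
    "http://api.openweathermap.org/data/2.5/weather",
    params={"q": "New York", "appid": "YOUR_API_KEY"},
).prepare()
print(prepared.url)
# http://api.openweathermap.org/data/2.5/weather?q=New+York&appid=YOUR_API_KEY
```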

REST APIs


Luckily, many APIs are available that offer a wide range of datasets.

In principle, we have already seen how to access REST APIs with Python in the previous section.

In this mini guide, the focus is on web scraping, meaning that – for the most part – we don’t have access to structured data. Instead, we have to extract the data from the HTML code of a website.

BeautifulSoup

Think, for example, of an ecommerce store with hundreds of products.

The data is often not available in a structured format such as JSON. (Strictly speaking, HTML is machine-readable too; it just isn’t designed for data exchange.) This could be because the website operator doesn’t see value in providing the data in a structured way, or because the operator does not want the data to be crawled by robots. We will see in the last section of this guide how to deal with the latter case.

BeautifulSoup is a Python library that makes it easy to scrape information from web pages. It sits atop an HTML or XML parser, providing Pythonic idioms for iterating, searching, and modifying the parse tree.

It is frequently used together with the requests library: requests downloads the web page content, and BeautifulSoup then parses the HTML.

Here is an example of how you can use BeautifulSoup to scrape a website:

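```python
import requests
from bs4 import BeautifulSoup

url = "https://books.toscrape.com"

# Download the page and fail loudly on HTTP errors
response = requests.get(url, timeout=10)
response.raise_for_status()

# Parse the HTML with Python's built-in parser
soup = BeautifulSoup(response.text, "html.parser")

# Each book title is stored in the title attribute of the <a> inside an <h3>
book_titles = [a["title"] for a in soup.select("h3 a")]
print(book_titles)
```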
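To see what these selectors return without making a network request, you can feed BeautifulSoup an HTML string directly. The markup below is a simplified, hand-written imitation of a product listing, not the site's exact HTML.

```python
from bs4 import BeautifulSoup

# Simplified, hand-written markup modeled loosely on a product listing
html = """
<article class="product_pod">
  <h3><a href="a.html" title="First Book">First Bo...</a></h3>
  <p class="price_color">£10.00</p>
</article>
<article class="product_pod">
  <h3><a href="b.html" title="Second Book">Second B...</a></h3>
  <p class="price_color">£20.00</p>
</article>
"""

soup = BeautifulSoup(html, "html.parser")

books = []
for article in soup.select("article.product_pod"):
    link = article.select_one("h3 a")
    books.append({
        # In this markup the link text is truncated, so read the title attribute
        "title": link["title"],
        "price": article.select_one(".price_color").get_text(strip=True),
    })

print(books)
```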

Scrapy

Scrapy is a powerful and flexible web scraping framework written in Python. Think of it as a combination of Requests and BeautifulSoup but with a lot more features.

For example, Scrapy can handle pagination by following links, and it can extract data from HTML as well as JSON and XML responses. It also provides item pipelines for storing the extracted data, for example in a database, and it sends requests concurrently instead of one at a time.
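Storing items in a database is typically done with an item pipeline: a plain class whose `process_item` method Scrapy calls for every item a spider yields. Below is a minimal sketch using SQLite from the standard library; the class name, database file, and table layout are assumptions for illustration.

```python
import sqlite3


class SQLitePipeline:
    """Hypothetical item pipeline that stores scraped books in SQLite.

    Scrapy calls open_spider/close_spider once per crawl and
    process_item for every item the spider yields.
    """

    def __init__(self, db_path="books.db"):
        self.db_path = db_path
        self.connection = None

    def open_spider(self, spider):
        self.connection = sqlite3.connect(self.db_path)
        self.connection.execute(
            "CREATE TABLE IF NOT EXISTS books (title TEXT, price TEXT)"
        )

    def process_item(self, item, spider):
        self.connection.execute(
            "INSERT INTO books (title, price) VALUES (?, ?)",
            (item.get("title"), item.get("price")),
        )
        return item  # hand the item on to any later pipelines

    def close_spider(self, spider):
        self.connection.commit()
        self.connection.close()
```

To activate it, you would list the class in the `ITEM_PIPELINES` setting, for example `{"myproject.pipelines.SQLitePipeline": 300}`, where the module path is hypothetical and the number controls the order in which pipelines run.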

With Scrapy, you create a “Spider” class that defines how to scrape a website: how to extract data from its pages and which links to follow to other pages.

Before we dive into the details, here is a brief example of a Scrapy spider:

```python
import scrapy


class BooksSpider(scrapy.Spider):
    name = "books"
    start_urls = ["http://books.toscrape.com"]

    def parse(self, response):
        # Each book on the page is wrapped in an <article class="product_pod">
        for article in response.css("article.product_pod"):
            yield {
                "title": article.css("h3 > a::attr(title)").get(),
                "price": article.css(".price_color::text").get(),
            }
```

In this example, we define a Spider class called BooksSpider that scrapes the website http://books.toscrape.com. The parse method is called with the response of the start URL. It extracts the title and price of each book on the page and yields a dictionary with the extracted data.

To run the spider, you can save the code in a file called books_spider.py and run it with the scrapy runspider command:

scrapy runspider books_spider.py

In the next entry of this mini-guide, we will dive deeper into Scrapy and further explore its features.

Common Web Scraping Obstacles

This section covers common obstacles encountered when scraping websites. The topics covered are:

- Pagination

- JavaScript / Client-Side Rendering

- Bot Detection

- Session and Login

1. Dealing with Pagination

Pagination is a common obstacle when scraping websites. It divides a large amount of data into smaller, more manageable chunks by splitting it into pages, each containing a subset of the total data. Pagination is often used to improve the user experience by reducing the amount of data displayed on a single page and making the content easier to navigate.

When scraping a website with pagination, you need to consider how to navigate through the pages to access all the data you want to scrape.

Here is how it could look with BeautifulSoup and requests:

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

url = "https://books.toscrape.com"


def get_entries(url):
    entries = []
    response = requests.get(url, timeout=10)
    soup = BeautifulSoup(response.text, "html.parser")

    # Find every <a> inside an <h3> and extract its title attribute
    entries += [a["title"] for a in soup.select("h3 a")]

    # Find the "next" link; it is missing on the last page
    next_page = soup.select_one("li.next a")
    if next_page:
        # The href is relative, so resolve it against the current page URL
        next_page_url = urljoin(url, next_page["href"])
        entries += get_entries(next_page_url)

    return entries


titles = get_entries(url)
print(len(titles))
```

Note that we have to join the URLs manually. The href attribute of the a tag only contains a relative path to the next page, so we have to resolve it against the URL of the current page to get the absolute URL.
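The recursive `get_entries` function above works, but it collects every page before returning anything and adds a stack frame per page. A generator with a loop avoids both. In this sketch, `fetch` is a hypothetical callable that takes a URL and returns HTML, so the logic can run against in-memory pages; with requests it could be `lambda u: requests.get(u, timeout=10).text`. The page contents are made up.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def iter_titles(start_url, fetch):
    """Yield book titles page by page, following "next" links in a loop."""
    url = start_url
    while url:
        soup = BeautifulSoup(fetch(url), "html.parser")
        for link in soup.select("h3 a"):
            yield link["title"]
        next_link = soup.select_one("li.next a")
        # Resolve the relative href against the page it was found on
        url = urljoin(url, next_link["href"]) if next_link else None


# Hypothetical in-memory pages standing in for real HTTP responses
pages = {
    "https://books.toscrape.com/": (
        '<h3><a title="Book A">Book A</a></h3>'
        '<ul><li class="next"><a href="catalogue/page-2.html">next</a></li></ul>'
    ),
    "https://books.toscrape.com/catalogue/page-2.html": (
        '<h3><a title="Book B">Book B</a></h3>'
    ),
}

print(list(iter_titles("https://books.toscrape.com/", pages.__getitem__)))
# ['Book A', 'Book B']
```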

With Scrapy, we can use the response.follow method to follow links. This method takes a URL and a callback function as arguments; the URL may be relative, in which case it is resolved against the current response automatically. The callback function is called with the response of the new URL.

Here is what a Scrapy spider could look like:

```python
import scrapy


class BooksSpider(scrapy.Spider):
    name = "books"
    allowed_domains = ["books.toscrape.com"]
    start_urls = ["http://books.toscrape.com"]

    def parse(self, response):
        self.log(f"I just visited {response.url}")

        for article in response.css("article.product_pod"):
            yield {
                "title": article.css("h3 > a::attr(title)").get(),
                "price": article.css(".price_color::text").get(),
            }

        # The "next" button is absent on the last page, so get() returns None there
        next_page_url = response.css("li.next > a::attr(href)").get()
        if next_page_url:
            # response.follow() resolves the relative href for us
            yield response.follow(url=next_page_url, callback=self.parse)
```
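Both examples above follow the "next" link. When a site uses predictable page numbers, another option is to generate the page URLs yourself and stop at the first page that does not exist. This sketch assumes a URL template of the form `.../catalogue/page-{page}.html` and a hypothetical `fetch` callable that returns `None` for missing pages (for example, on HTTP 404); verify the pattern against the real site before relying on it.

```python
from typing import Callable, Iterator, Optional


def numbered_pages(
    template: str,
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 1000,
) -> Iterator[str]:
    """Yield the HTML of page 1, 2, 3, ... until fetch() returns None."""
    for page in range(1, max_pages + 1):
        html = fetch(template.format(page=page))
        if html is None:  # page does not exist: we have gone past the last one
            break
        yield html


# Hypothetical two-page site kept in memory; dict.get returns None for unknown URLs
fake_site = {
    "https://books.toscrape.com/catalogue/page-1.html": "<html>page 1</html>",
    "https://books.toscrape.com/catalogue/page-2.html": "<html>page 2</html>",
}
template = "https://books.toscrape.com/catalogue/page-{page}.html"
print(len(list(numbered_pages(template, fake_site.get))))  # 2
```

With requests, `fetch` could return `response.text` when `response.ok` is true and `None` otherwise. The `max_pages` cap guards against looping forever if the site never returns an error.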

2. Client-Side Rendering

When a website uses JavaScript to render its content, it can be difficult to scrape the data. The reason is that the content is not present in the HTML source code that is returned by the server. Instead, the content is generated by the JavaScript code that runs in the browser.
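You can confirm this by parsing the raw HTML the server returns and checking whether the elements you see in the browser are actually there. The page below is a simplified, hypothetical stand-in for a client-side rendered page, where the data only exists inside a script.

```python
from bs4 import BeautifulSoup

# Hypothetical server response: the quotes exist only as JavaScript data,
# which a browser would turn into <div class="quote"> elements at runtime
raw_html = """
<html>
  <body>
    <div class="container"></div>
    <script>
      var data = [{"text": "Some quote", "author": "Someone"}];
      // ...code that renders `data` into the page...
    </script>
  </body>
</html>
"""


def has_elements(html, css_selector):
    """Return True if the selector matches anything in the static HTML."""
    soup = BeautifulSoup(html, "html.parser")
    return bool(soup.select(css_selector))


print(has_elements(raw_html, ".quote"))  # False: the data needs JavaScript to appear
```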

As your Python scripts don’t typically run JavaScript, you can’t simply use requests and beautifulsoup to scrape the data. You need to use a tool that can execute JavaScript, such as Playwright.

Playwright is a browser automation library, originally written for Node.js, with official bindings for Python and other languages. It provides a high-level API for automating browsers and lets you interact with web pages the way a real user would, which makes it well suited to scraping websites that rely on JavaScript to render content.

We can use Playwright inside our Scrapy spiders. Here is an example of how you can use Playwright to scrape a website with client-side rendering:

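```python
import scrapy
from playwright.sync_api import sync_playwright


class QuotesSpider(scrapy.Spider):
    name = "quotes"
    start_urls = ["http://quotes.toscrape.com/js"]

    def parse(self, response):
        # Note: this runs a blocking browser session inside the Scrapy callback.
        # Playwright's sync API refuses to run inside a running asyncio event loop,
        # so this only works when Scrapy is not using its asyncio reactor; the
        # scrapy-playwright plugin is the usual non-blocking integration.
        with sync_playwright() as p:
            browser = p.chromium.launch()
            page = browser.new_page()
            page.goto(response.url)
            # Wait until the JavaScript has rendered at least one quote
            page.wait_for_selector(".quote")

            for quote in page.query_selector_all(".quote"):
                yield {
                    "text": quote.query_selector(".text").inner_text(),
                    "author": quote.query_selector(".author").inner_text(),
                    "tags": [tag.inner_text() for tag in quote.query_selector_all(".tag")],
                }

            browser.close()
```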

3. Bot Detection

Some websites may restrict access to bots. One simple way to do this is to check the User-Agent header of the HTTP request, a string that identifies the client's browser and operating system. If the User-Agent header is missing or set to a value the server does not recognize, the server may block the request.
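With requests, headers set on a `Session` are sent with every request made through that session. This sketch uses a made-up User-Agent string; whether a particular value gets through depends entirely on the site. Preparing the request without sending it shows the header that would go over the wire.

```python
import requests

# Hypothetical User-Agent string identifying the scraper
USER_AGENT = "Mozilla/5.0 (compatible; ExampleScraper/1.0; +https://example.com/bot)"

session = requests.Session()
# Headers set on the session are merged into every request it sends
session.headers.update({"User-Agent": USER_AGENT})

# Prepare (but don't send) a request to inspect the headers it would carry;
# session.get(url) would send the same headers over the network
prepared = session.prepare_request(requests.Request("GET", "https://books.toscrape.com"))
print(prepared.headers["User-Agent"])
```

In Scrapy, the equivalent is the `USER_AGENT` setting, which you can set per spider via `custom_settings`.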

However, there are more sophisticated ways to detect bots. For example, the server may check a client's request rate. If a client sends too many requests in a short period of time, the server may block the client. Your IP address may also be blacklisted if you send too many requests.
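To stay under a site's rate limits, a scraper can simply space out its requests. Below is a minimal sketch of a throttle that enforces a minimum interval between calls; the `Throttle` class is hypothetical, and the right interval for any given site is an assumption you would have to tune.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests.

    `clock` and `sleep` default to the real time functions but can be
    swapped out, for example in tests.
    """

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now += remaining
        self._last = now


throttle = Throttle(min_interval=2.0)  # at most one request every 2 seconds
```

Call `throttle.wait()` right before each `requests.get(...)`. In Scrapy, the built-in `DOWNLOAD_DELAY` and `AUTOTHROTTLE_ENABLED` settings serve the same purpose.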

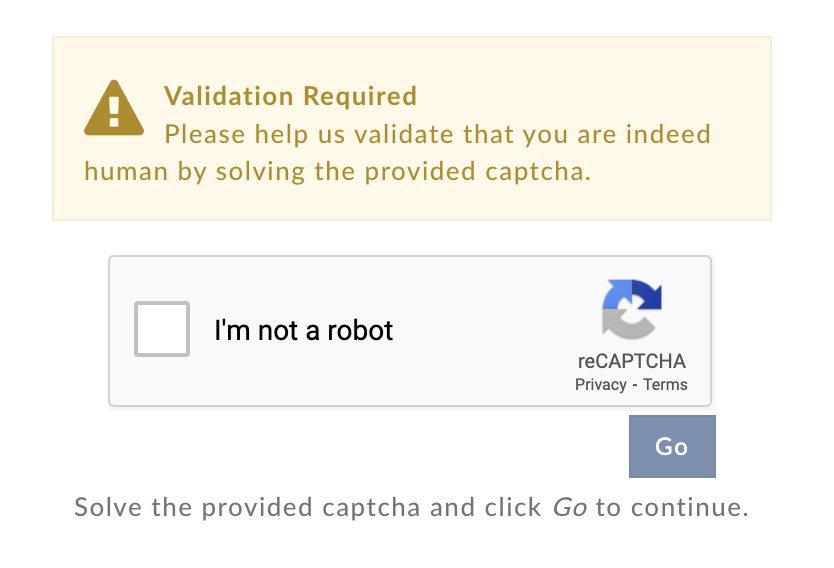

You might encounter a CAPTCHA when browsing the web from time to time.

These kinds of challenges are designed to distinguish between humans and bots. They are often used to prevent bots from scraping websites. If you encounter a CAPTCHA when scraping a website, you can use a CAPTCHA-solving service to bypass it.


4. Session and Login

Some websites require users to log in before they can access certain pages. This is a common obstacle when scraping websites. However, to avoid making this first entry longer than needed, we’ll cover how to handle session and login requirements in part two of our mini-guide.
