Are your web scraping scripts constantly blocked, even when using proper proxies? You might forget an important piece of the puzzle in web page scraping: custom HTTP headers.

In today’s web scraping article, we’ll dive deeper into HTTP headers and cookies, why they are important for scraping a website, and how we can grab and use them in our code to build a robust web scraper.

What are HTTP Headers?

According to MDN “ An HTTP header is a field of an HTTP request or response that passes additional context and metadata about the request or response” and consists of a case-sensitive name (like age, cache-control, Date, cookie, etc.) followed by a colon (:) and then its value.

In simpler terms:

- The user/client (usually a browser) sends a request containing request headers providing more details to the server.

- Then, the server responds with the data requested in the structure that fits the specifications contained in the request header.

For clarity, here’s the request header our browser is sending Prerender at the time of writing this article:

</p>

<pre>authority: in.hotjar.com

:method: POST

:path: /api/v2/client/sites/2829708/visit-data?sv=7

:scheme: https

accept: */*

accept-encoding: gzip, deflate, br

accept-language: en-US,en;q=0.9,it;q=0.8,es;q=0.7

content-length: 112

content-type: text/plain; charset=UTF-8

origin: https://prerender.io

referer: https://prerender.io/

sec-ch-ua: " Not A;Brand";v="99", "Chromium";v="101", "Microsoft Edge";v="101"

sec-ch-ua-mobile: ?0

sec-ch-ua-platform: "macOS"

sec-fetch-dest: empty

sec-fetch-mode: cors

sec-fetch-site: cross-site

user-agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36 Edg/101.0.1210.47</pre>

<p>What are Web Cookies?

Web cookies, also known as HTTP cookies or browser cookies, are a piece of data sent by a server (HTTP response header) to a user’s browser for later identification.

In a later request (HTTP header request), the browser will send the cookie back to the server, making it possible for the server to recognize the browser.

Websites and web applications use cookies for session management (like keeping you logged in), personalization (to keep settings and preferences), tracking, and, in some cases, security.

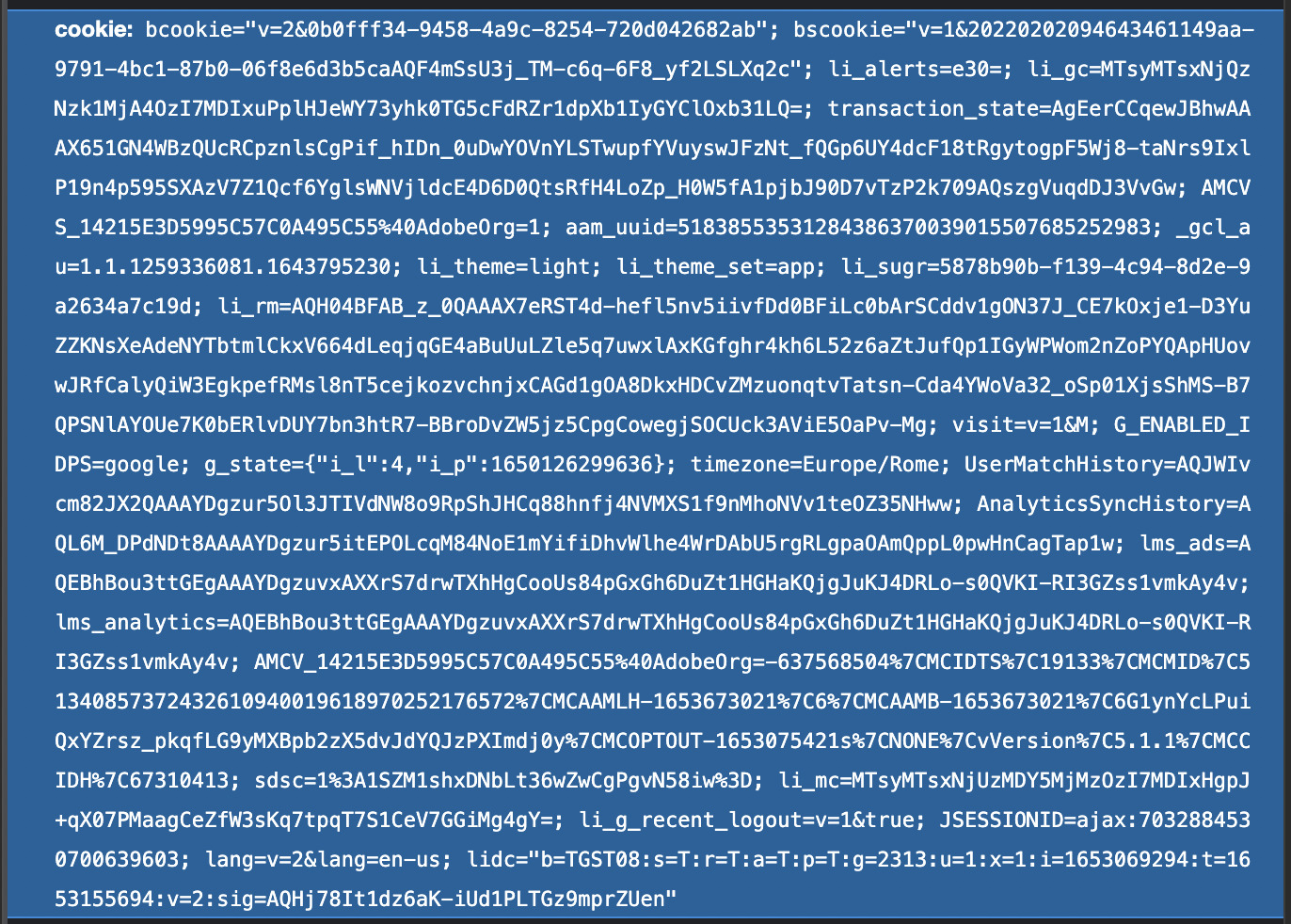

Let’s see what a cookie would look like in LinkedIn’s request header:

Why are HTTP Headers Important for Web Page Scraping?

A lot of website owners know their data may be scraped one way or another, so they use many different tools and strategies to identify web-scraping bots and block them from their sites. And there are many valid points for them to do so, as badly optimized bots can slow down or even break websites.

Note: You can avoid overwhelming web servers and decrease your chances of getting blocked by following these web scraping best practices.

However, one way that doesn’t get a lot of attention is the use of HTTP headers and cookies.

Because your browser/client sends HTTP headers in its requests, the server can use this information to detect false users, thus blocking them from accessing the website or providing false/bad information.

But the same can work the other way around.

By optimizing the headers sent through our scraping bot’s requests, we can mimic the behavior of an organic user, reducing our chances of getting blacklisted and, in some cases, improving the quality of the data we collect.

Most Common HTTP Headers for Web Scraping

There is a big list of HTTP headers we could learn and use in our requests, but in most cases, there are only a few that we really care about for web scraping:

1. User-Agent

This is probably the most important header for web scraping purposes as it identifies “the application type, operating system, software vendor or software version of the requesting software user agent,” making it the first check most servers will run.

For example, when sending a request using the Requests Python library, the user-agent field will show the following information – depending on your Python version:

</p>

<pre>user-agent: python-requests/2.22.0</pre>

<p>Which is easy to spot and block by the server.

Instead, we want our user-agent header to look more like the one shown in our first example:

</p>

<pre>user-agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36 Edg/101.0.1210.47</pre>

<p>2. Accept-Language

Although it’s not always necessary, it tells the server what language version of the data to provide.

When there’s a big discrepancy between each request from a language standpoint, it could tell the server there’s a bot involved.

However, technically speaking, “the server should always pay attention not to override an explicit user choice,” so if the URLs you’re scraping already have specific languages, it can still be perceived as an organic user.

Here’s how it appears in our example request:

</p>

<pre>accept-language: en-US,en;q=0.9,it;q=0.8,es;q=0.7</pre>

<p>3. Accept-Encoding

Just as its name implies, it tells the server which compression algorithm “can be used on the resource sent back,” saving up to 70% of the bandwidth needed for certain documents, thus reducing the stress our scripts put upon servers.

</p>

<pre>accept-encoding: gzip, deflate, br</pre>

<p>4. Referer

The Referer HTTP header tells the server the page which the user comes from. Although it’s used mostly for tracking, it can also help us mimic an organic user’s behavior by, for example, telling the server we’ve come from a search engine like Google.

</p>

<pre>referer: https://prerender.io/</pre>

<p>5. Cookie

We’ve already discussed what cookies are. However, we might haven’t stated clearly why cookies are important for web scraping.

Cookies allow servers to communicate using a small piece of data, but what happens when the server sends a cookie but then the browser doesn’t store it and sends it back in the next request? There’s the trick.

Cookies can also be used to identify if the request is coming from a real user or a bot.

Vice versa, we can use web cookies to mimic the organic behavior of a user when browsing a website by sending the cookies back to the server after every interaction.

By changing the cookie itself, we can also tell the server we’re a new user, making it easier for our scraping scripts to avoid getting blocked.

How to Grab HTTP Headers and Cookies Correctly

Before we can use headers in our web scraping code, we need to be able to grab them from somewhere.

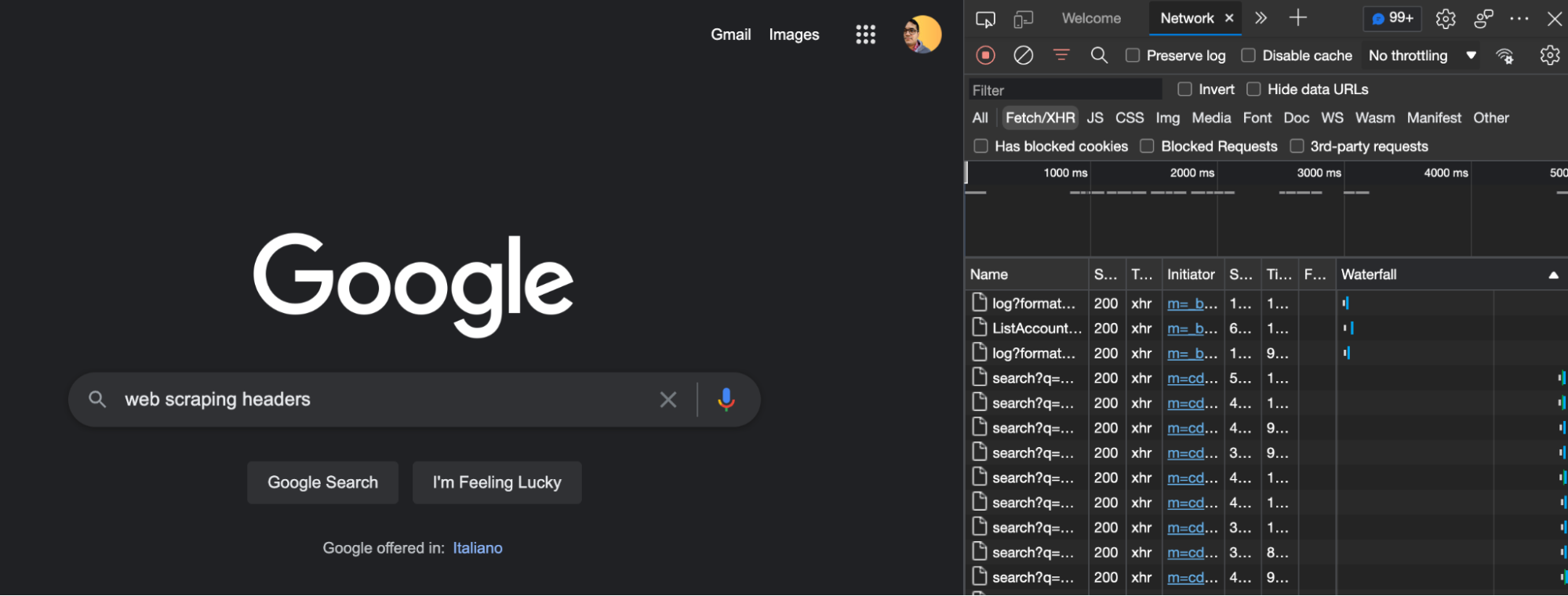

Let’s use our browser and navigate to the target website.

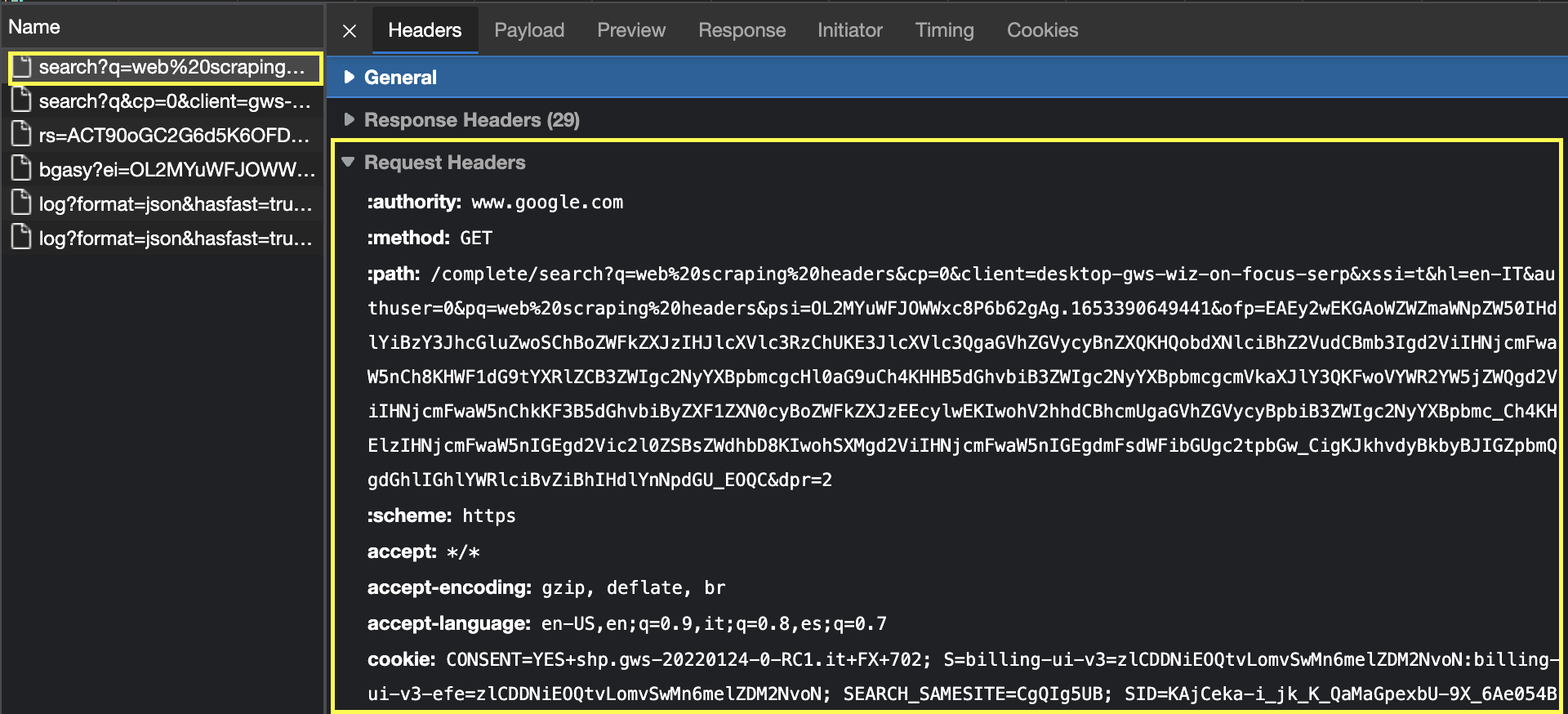

For example, go to google.com > right-click > inspect to open the developer tools.

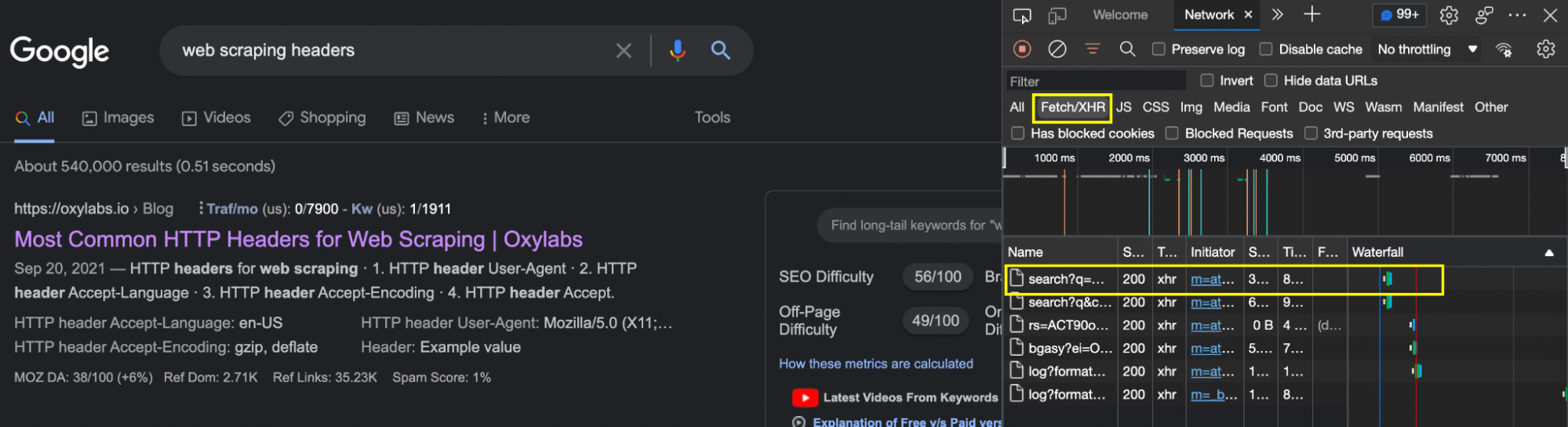

From there, we’ll navigate to the Network tab and, back on Google, search for the query “web scraping headers.”

As the page loads, we’ll see the network tab populate. Take a closer look at the Fetch/XHR tab, where we’ll be able to find the documents that are being fetched by the browser and, of course, the HTTP headers used in the request.

Although there’s no single standard name for the file we’re looking for, it’s usually a relevant name for what we’re trying to do on the page, or it’s the file that provides the data being rendered.

Clicking on the file, we’ll open, by default, the Headers tab, and by scrolling down, we’ll see the Request Headers section.

Now we can copy and paste the header fields and their value into our web scraping code.

How to Customize HTTP Headers To Avoid Getting Blocked in Web Scraping

How you use custom HTTP headers will depend on the programming language you’re using for your web scraping projects. Still, we’ll try to add as many examples as possible.

You’ll notice that each method is different, but they share the same logic, so no matter what codebase you’re using, it’ll be very easy to translate.

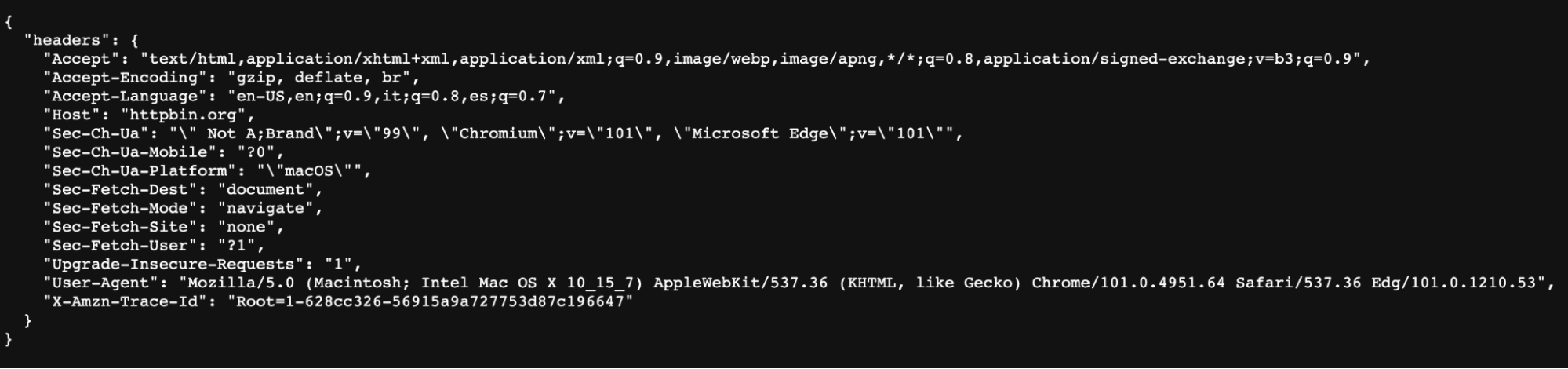

For all our examples, we’ll send our request to https://httpbin.org/headers, a website designed to show how the response and requests look from the server’s points of view.

Here’s what appears if we open the link above on our browser:

The website responds with the headers that our browser is sending, and we can now use the same headers when sending our request through code.

Using Custom HTTP Headers in Python For Data Extraction

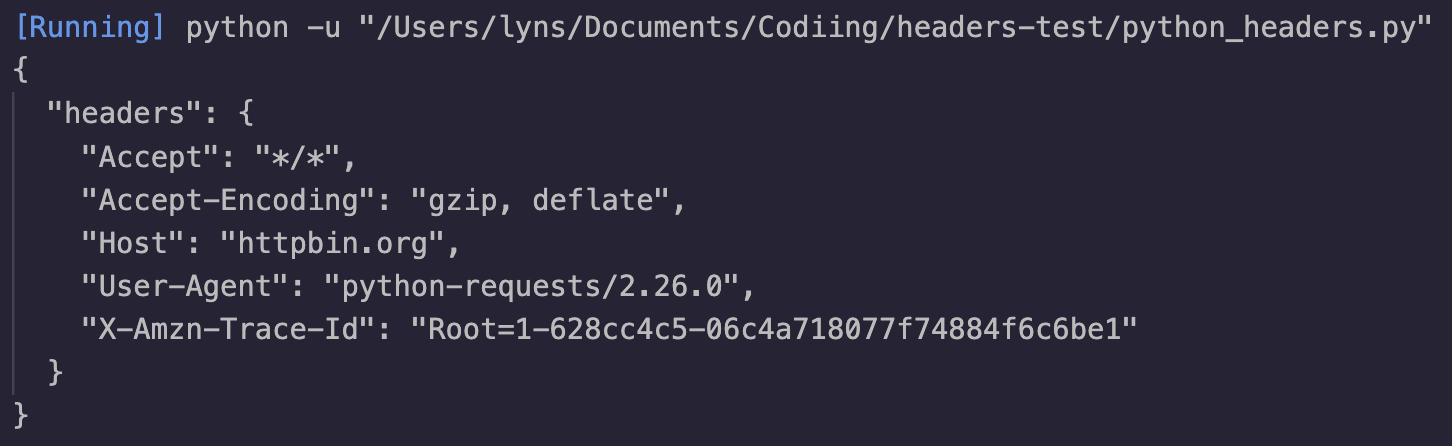

Before using our custom headers, let’s first send a test request to see what it returns:

</p>

<pre>import requests

url = 'https://httpbin.org/headers'

response = requests.get(url)

print(response.text)</pre>

<p>As you can see, the User-Agent being used is the default python-requests/2.26.0, making it super easy for a server to recognize our bot.

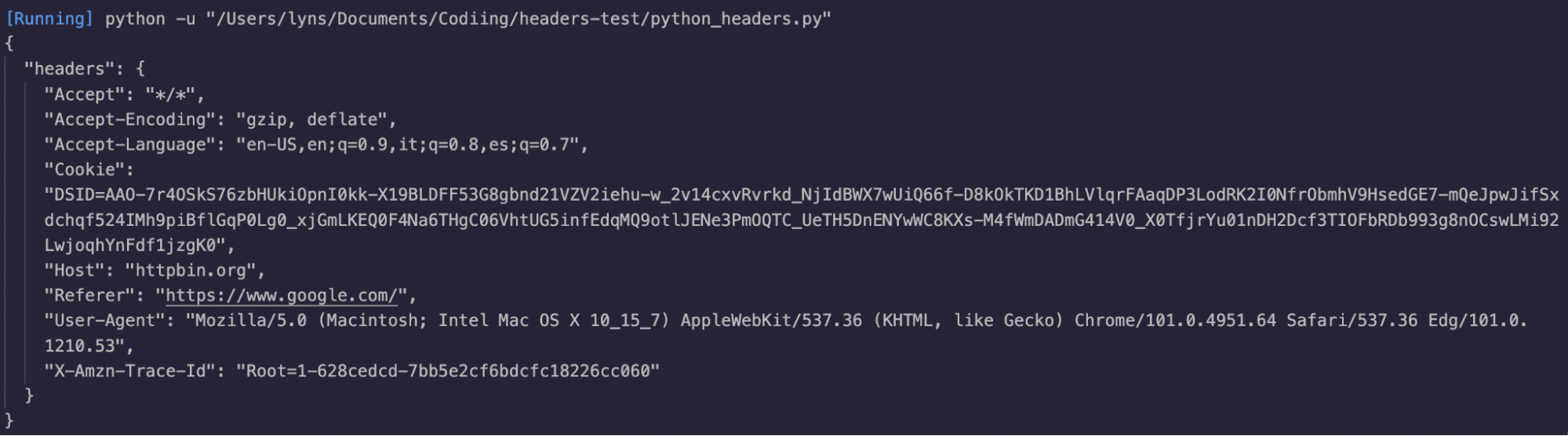

Knowing this, let’s move to the next step and add our custom headers to our request:

</p>

<pre>import requests

url = 'https://httpbin.org/headers'

headers = {

'accept': '*/*',

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36 Edg/101.0.1210.53',

'Accept-Language': 'en-US,en;q=0.9,it;q=0.8,es;q=0.7',

'referer': 'https://www.google.com/',

'cookie': 'DSID=AAO-7r4OSkS76zbHUkiOpnI0kk-X19BLDFF53G8gbnd21VZV2iehu-w_2v14cxvRvrkd_NjIdBWX7wUiQ66f-D8kOkTKD1BhLVlqrFAaqDP3LodRK2I0NfrObmhV9HsedGE7-mQeJpwJifSxdchqf524IMh9piBflGqP0Lg0_xjGmLKEQ0F4Na6THgC06VhtUG5infEdqMQ9otlJENe3PmOQTC_UeTH5DnENYwWC8KXs-M4fWmDADmG414V0_X0TfjrYu01nDH2Dcf3TIOFbRDb993g8nOCswLMi92LwjoqhYnFdf1jzgK0'

}

response = requests.get(url, headers=headers)

print(response.text)</pre>

<p>- First, we create a dictionary with our headers. Some of them we got from the HTTPbin, but the cookies are from MDN documentation.

- Then, we added Google as the referer because, in most cases, users will come to the site by clicking a link from Google.

Here’s the result:

Theoretically, you could use any custom combination you’d like as values to your custom headers.

However, some websites won’t allow you access to them without sending a specific set of headers.

When using custom headers while scraping, it’s better to see what headers your browser already sends when navigating the target website and use those.

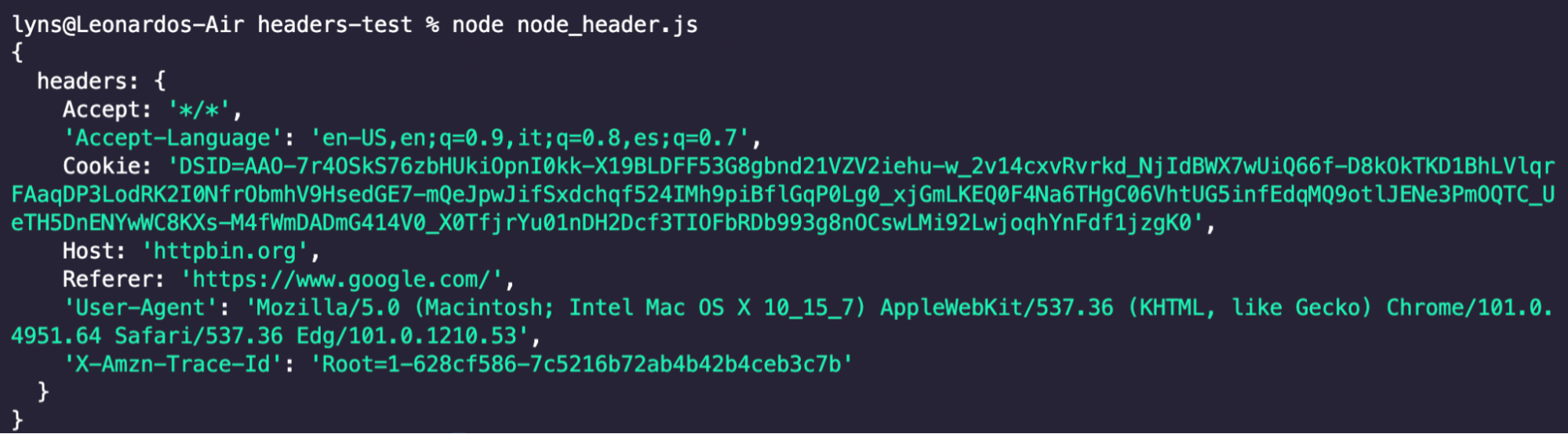

Using Custom HTTP Headers in Node.JS to Collect Data

We’re going to do the same thing but using the Node.JS Axios package to send our request:

</p>

<pre>const axios = require('axios').default;

const url = 'https://httpbin.org/headers';

const headers = {

'accept': '*/*',

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36 Edg/101.0.1210.53',

'Accept-Language': 'en-US,en;q=0.9,it;q=0.8,es;q=0.7',

'referer': 'https://www.google.com/',

'cookie': 'DSID=AAO-7r4OSkS76zbHUkiOpnI0kk-X19BLDFF53G8gbnd21VZV2iehu-w_2v14cxvRvrkd_NjIdBWX7wUiQ66f-D8kOkTKD1BhLVlqrFAaqDP3LodRK2I0NfrObmhV9HsedGE7-mQeJpwJifSxdchqf524IMh9piBflGqP0Lg0_xjGmLKEQ0F4Na6THgC06VhtUG5infEdqMQ9otlJENe3PmOQTC_UeTH5DnENYwWC8KXs-M4fWmDADmG414V0_X0TfjrYu01nDH2Dcf3TIOFbRDb993g8nOCswLMi92LwjoqhYnFdf1jzgK0',

};

axios.get(url, Headers={headers})

.then((response) => {

console.log(response.data);

}, (error) => {

console.log(error);

});

</pre>

<p>Here’s the result:

Using Custom HTTP Headers in ScraperAPI

Pro Tip:

Please note that the need for custom headers when using ScraperAPI is a rare scenario as in most cases we take care of that for you.

Custom headers should only be sent when specific headers or cookies are required in order for the target site to return a successful response or ensure that specific elements are included.

Before sending custom headers please instead try sending these parameters (separately):

render=true,premium=trueandultra_premium=true.

A great feature of ScraperAPI is that it uses machine learning and years of statistical analysis to determine the best header combinations for each request we send.

However, in the unlikely scenario you need to use custom headers, ScraperAPI let’s you implement your own.

In our documentation, you’ll be able to find the full list of coding examples for Node.JS, PHP, Ruby web scraping, and Java. But for the sake of time, here’s a full Python example for using ScraperAPI with custom headers:

</p>

<pre>import requests

headers = {

'accept': '*/*',

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36 Edg/101.0.1210.53',

'Accept-Language': 'en-US,en;q=0.9,it;q=0.8,es;q=0.7',

'referer': 'https://www.google.com/',

'cookie': 'DSID=AAO-7r4OSkS76zbHUkiOpnI0kk-X19BLDFF53G8gbnd21VZV2iehu-w_2v14cxvRvrkd_NjIdBWX7wUiQ66f-D8kOkTKD1BhLVlqrFAaqDP3LodRK2I0NfrObmhV9HsedGE7-mQeJpwJifSxdchqf524IMh9piBflGqP0Lg0_xjGmLKEQ0F4Na6THgC06VhtUG5infEdqMQ9otlJENe3PmOQTC_UeTH5DnENYwWC8KXs-M4fWmDADmG414V0_X0TfjrYu01nDH2Dcf3TIOFbRDb993g8nOCswLMi92LwjoqhYnFdf1jzgK0'

}

payload = {

'api_key': '51e43be283e4db2a5afb62660xxxxxxx',

'url': 'https://httpbin.org/headers',

'keep_headers': 'true',

}

response = requests.get('http://api.scraperapi.com', params=payload, headers=headers)

print(response.text)</pre>

<p>So using custom headers in Python is quite simple:

- We need to create our dictionary of headers like before and right after create our payload

- The first element we’ll add to the payload is our API Key which we can generate by creating a free ScraperAPI account

- Next, we’ll add our target URL – in this case https://httpbin.org/headers

- The last parameter will be keep_headers, set to true, to tell ScraperAPI to keep our custom headers

- Lastly, we’ll add all elements together right inside our requests.get() method

The combination of all of these will result in a URL like this:

</p>

<pre>http://api.scraperapi.com/?api_key=51e43be283e4db2a5afb62660xxxxxxx&url=http://httpbin.org/headers&keep_headers=true</pre>

<p>Increase Your Web Scraping Success by Correctly Handling HTTP Headers

Although HTTP headers and cookies can help you bypass certain sites’ anti-scraping mechanisms, they are not enough to collect data from hard-to-scrape websites or at an enterprise scale.

To simplify your infrastructure and never get blocked again, use ScraperAPI and enjoy a 99.99% success rate, in just one line of code.

Our API will choose and send you the right headers and IP combinations, freeing your mind from the task, so you can focus on what matters: data!

If you’re working with large projects (100k+ or millions of requests), you can use our Async Scraper to submit all your requests concurrently. This will allow ScraperAPI to work on each scraping job individually and return the data to your preferred webhook.

No coding experience? DataPipeline allows you to build and automate scraping projects in just minutes without writing a single line of code.

Try ScraperAPI for free and get access to all our tools with 5,000 free API credits.

Until next time, happy scraping!

FAQs About Leveraging HTTP Headers and Cookies for Web Data Extraction

1. How Can HTTP Headers and Cookies Help Me Avoid Being Blocked When Scraping Web Data?

HTTP headers can signal to the server whether a request is coming from a legitimate user or a web scraping bot. To ensure that the target website doesn’t identify your requests as malicious, you need to mimic the behavior of a real user. To do this, copy and paste the header fields of your HTTP headers into your web scraper code. This web scraping guide will show you how.

2. How to Correctly Use HTTP Headers and Cookies to Collect Web Data?

Different programming languages have different techniques for using custom HTTP headers. For example, if you use Python, you can follow these steps:

- Create a dictionary of custom headers.

- Set Google as the referrer to simulate real-world user traffic.

3. What Is an Example of Optimized User-Agent HTTP Headers for Web Scraping?

Here are examples of unoptimized and optimized user-agent HTTP headers for web scraping. For this, we’re using the Requests Python library.

- Unoptimized user-agent: “user-agent: python-requests/2.22.0”

- Optimized user-agent: user-agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36 Edg/101.0.1210.47