Scraping Google Shopping search results lets you gather a massive amount of product, pricing, and review data you can use for competitive analysis, dynamic pricing, and more.

In this tutorial, I’ll show you how to build a Google Shopping scraper using Python and Requests. No need for headless browsers or complicated workarounds. By the end, you’ll be able to scrape product results from hundreds of search terms and export all of this data into a CSV or JSON file.


TL;DR: Google Shopping search scraper

For those in a hurry, here’s the finished code we’re covering in this tutorial:

```python
import requests
import pandas as pd

# Search terms to scrape; add as many as you need
keywords = [
    'katana set',
    'katana for kids',
    'katana stand',
]

for keyword in keywords:
    products = []

    # Parameters for ScraperAPI's Google Shopping endpoint
    payload = {
        'api_key': 'YOUR_API_KEY',
        'query': keyword,
        'country_code': 'it',
        'tld': 'it',
        'num': '100'
    }

    response = requests.get('https://api.scraperapi.com/structured/google/shopping', params=payload)
    data = response.json()

    # Organic product listings for this search term
    organic_results = data['shopping_results']
    print(len(organic_results))

    for product in organic_results:
        products.append({
            'Name': product['title'],
            'ID': product['docid'],
            'Price': product['price'],
            'URL': product['link'],
            'Thumbnail': product['thumbnail']
        })

    # One CSV file per search term
    df = pd.DataFrame(products)
    df.to_csv(f'{keyword}.csv')
```

Before running the script, create a ScraperAPI account and add your API key to the api_key parameter within the payload.
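Hardcoding the key works for a quick test, but it's easy to leak it when you share the script. Here is a minimal sketch that builds the same payload while reading the key from an environment variable. The variable name SCRAPERAPI_KEY and the build_payload helper are hypothetical choices for illustration, not part of ScraperAPI.

```python
import os

# Hypothetical environment variable name; use whatever name you prefer
API_KEY_ENV_VAR = 'SCRAPERAPI_KEY'


def build_payload(keyword, country_code='it', tld='it', num='100'):
    """Build the Google Shopping payload, reading the API key from the environment."""
    api_key = os.environ.get(API_KEY_ENV_VAR)
    if not api_key:
        raise RuntimeError(f'Set the {API_KEY_ENV_VAR} environment variable first')
    return {
        'api_key': api_key,
        'query': keyword,
        'country_code': country_code,
        'tld': tld,
        'num': num,
    }
```

On macOS or Linux you could run export SCRAPERAPI_KEY=your_key before starting the script, then call build_payload(keyword) inside the loop instead of writing the dictionary by hand.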

Understanding Google Shopping

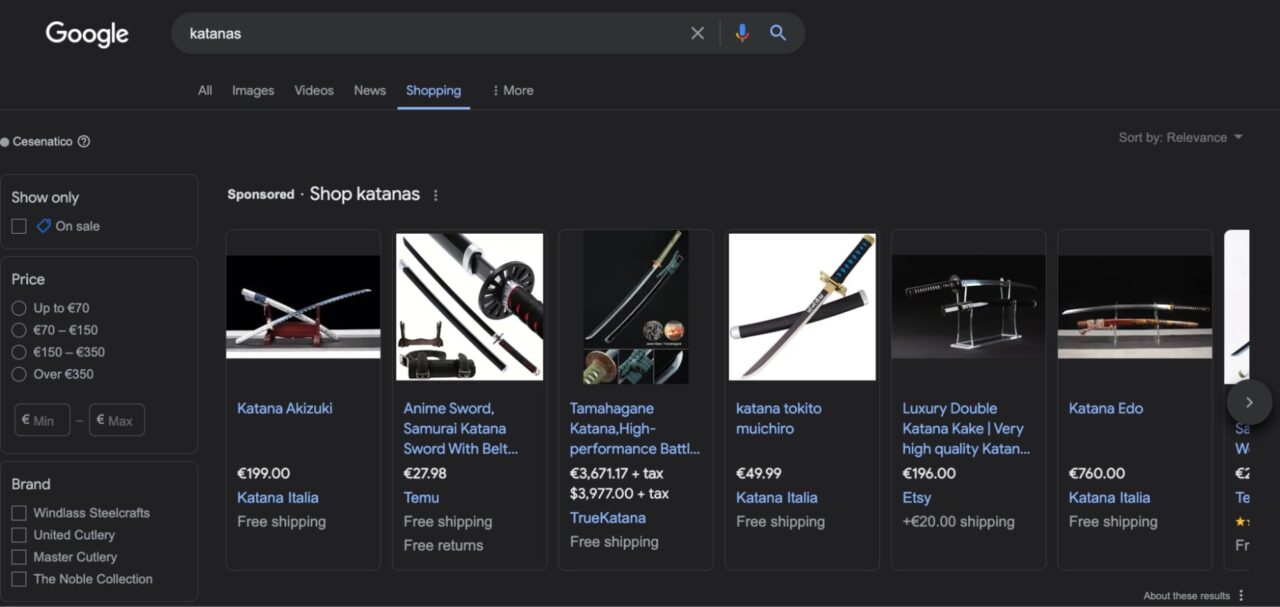

Google Shopping is a search engine that allows consumers to find, compare, and buy products from thousands of vendors in a single place.

It offers several features that make finding the right option easier, like sorting and filtering products by price, brand, shipping, or whether the item is on sale.

After clicking on a product, Google Shopping will provide more context or send you directly to the seller’s product page, where you can buy it.

So, unlike ecommerce marketplaces, Google Shopping works more like a directory than an actual ecommerce site. This makes it a great source of product data, as you can collect thousands of product listings ranking for your target keywords.

The challenge of scraping Google Shopping

Google Shopping uses advanced anti-scraping mechanisms to prevent bots from gathering product data at any scale. Without the proper infrastructure, your scrapers will usually be blocked from the start.

Techniques like rate limiting, browser fingerprinting, and CAPTCHAs are among the most common ways to keep scripts off the site. On top of that, Google Shopping uses JavaScript to serve its dynamic result pages, making your scraping projects even more computationally (and monetarily) expensive, as you’ll need to account for JS rendering.

To overcome these challenges, you can use a scraping solution like ScraperAPI’s Google Shopping endpoint, which allows you to scrape Google Shopping search results in JSON format with just an API call.

Note: Check out our tutorial if you’re interested in scraping Google search results.

Scraping Google Shopping results with Python

For the rest of the tutorial, I’ll show you how to use this SERP API to collect data from several search terms.

By the end, you’ll be able to gather details like:

- Product title

- Product ID

- Price

- Link / URL

- Thumbnail

And more.

Note: To follow this tutorial, sign up for a free ScraperAPI account to get a unique API key and 5,000 API credits.

Let’s begin!

Step 1: set up your Python project

To get started, make sure you have Python 3 installed on your computer. You can check this from your terminal by running python --version (on many macOS and Linux systems, the command is python3 --version).

Then, install the required dependencies using pip:

```bash
pip install requests pandas
```

We’ll use Requests to send the get() request to the endpoint and extract the data we need, while Pandas will help us format the data into a DataFrame and export it into a CSV.
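If you'd like to confirm the setup from Python itself, here is a small sketch that uses only the standard library: sys.version_info reports the interpreter version, and importlib.util.find_spec returns None for packages that aren't installed. The helper names python_is_supported and missing_packages are hypothetical.

```python
import importlib.util
import sys


def python_is_supported(minimum=(3, 0)):
    """Return True if the running interpreter is at least `minimum`."""
    return sys.version_info[:2] >= minimum


def missing_packages(names):
    """Return the top-level package names that can't be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == '__main__':
    print('Python', sys.version.split()[0], 'OK' if python_is_supported() else 'too old')
    missing = missing_packages(['requests', 'pandas'])
    print('Missing:', ', '.join(missing) if missing else 'none')
```

If anything shows up as missing, rerun the pip command above in the same environment you use to run the script.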

Step 2: write your payload

To get data out of the endpoint, we’ll first need to set up a payload with the necessary context for the API.

Our Google Shopping endpoint accepts the following parameters:

| Parameter | Description |
| --- | --- |
| api_key | Your unique API key for authentication |
| query | The search term you want to scrape products for |
| country_code | This parameter determines the country’s IP. When set to us, your requests will only be sent from US-located proxies. |
| tld | Specify the top-level domain you want to target, like “.com” or “.co.uk”. |
| num | Sets the number of results you get per request. |
| start | Set the starting offset in the result list. For example, start=10 sets the first element in the result list to be the 10th search result. |

Note: To learn more about these parameters, check our documentation.
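Since num limits how many results come back per request and start shifts the offset, you can combine them to request results page by page. The sketch below assumes start behaves as a zero-based offset into the result list and that page_size stays within whatever maximum num the endpoint accepts; check the documentation for both. paginated_payloads is a hypothetical helper.

```python
def paginated_payloads(api_key, keyword, total_results, page_size=100,
                       country_code='it', tld='it'):
    """Build one payload per page, shifting `start` by `page_size` each time."""
    payloads = []
    for start in range(0, total_results, page_size):
        payloads.append({
            'api_key': api_key,
            'query': keyword,
            'country_code': country_code,
            'tld': tld,
            # Only ask for the results still needed on the last page
            'num': str(min(page_size, total_results - start)),
            # Assumed zero-based offset into the result list
            'start': str(start),
        })
    return payloads
```

You would then send each payload with requests.get() exactly as in the single-request example, for instance by looping over paginated_payloads('YOUR_API_KEY', 'katana set', 300).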

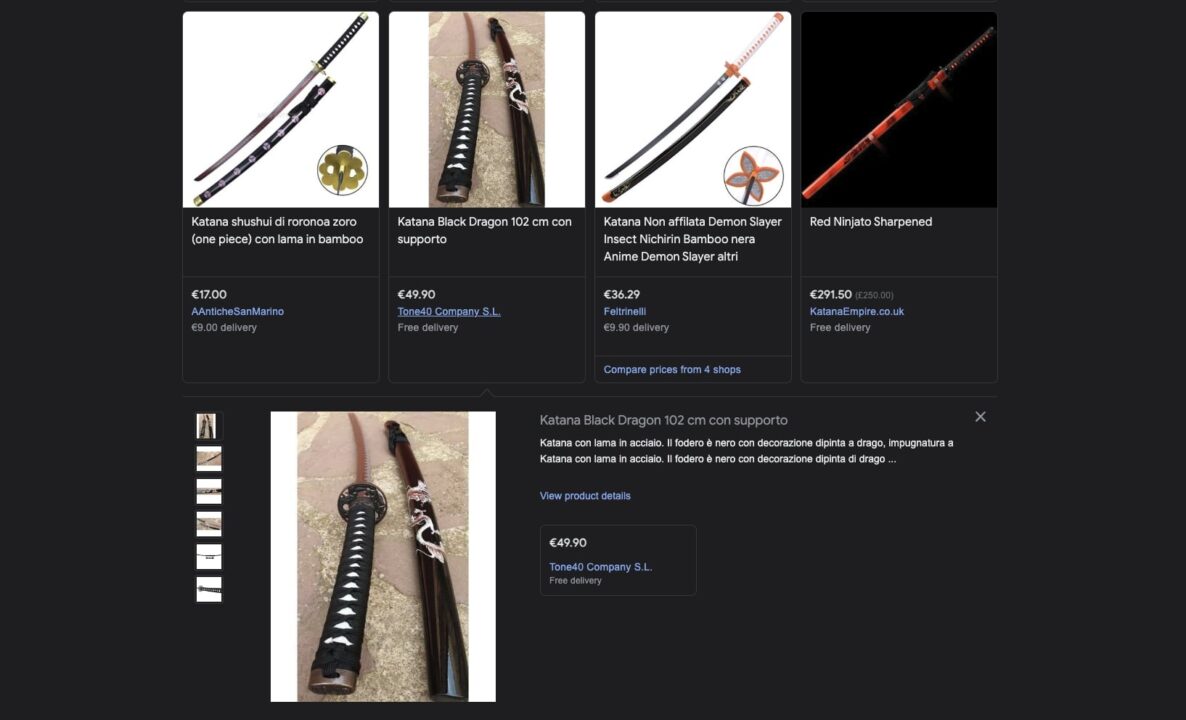

Let’s say we want to start selling katanas in Italy, so we decide to scrape the top 100 results for the query katana set to improve our listings and make sure our prices stay competitive.

Here’s my suggested payload:

```python
payload = {
    'api_key': 'YOUR_API_KEY',
    'query': 'katana set',
    'country_code': 'it',
    'tld': 'it',
    'num': '100'
}
```
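Requests turns this dictionary into the URL's query string. To see roughly what gets sent, you can reproduce the encoding with the standard library's urllib.parse.urlencode. Treat this as an approximation for inspection only; Requests' own encoding may differ in small details.

```python
from urllib.parse import urlencode

ENDPOINT = 'https://api.scraperapi.com/structured/google/shopping'

payload = {
    'api_key': 'YOUR_API_KEY',
    'query': 'katana set',
    'country_code': 'it',
    'tld': 'it',
    'num': '100'
}

# Build the URL roughly the way requests.get(ENDPOINT, params=payload) does
request_url = f'{ENDPOINT}?{urlencode(payload)}'
print(request_url)
```

Keep in mind that the resulting URL contains your API key, so avoid pasting it anywhere public.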

Step 3: send a get request to ScraperAPI

To send a get() request to the Google Shopping endpoint:

- Import your dependencies at the top of your Python script, before the payload:

```python
import requests
import pandas as pd
```

- Then send your get() request to the https://api.scraperapi.com/structured/google/shopping endpoint, with your payload as params:

```python
response = requests.get('https://api.scraperapi.com/structured/google/shopping', params=payload)
print(response.content)
```

For testing, we’re printing the raw response returned by the API (for this example, I set num to 1):

```
"shopping_results":[
  {
    "position":1,
    "docid":"766387587079352848",
    "link":"https://www.google.it/url?url=https://www.armiantichesanmarino.eu/set-tre-katana-di-roronoa-zoro-one-piece-complete-di-fondina.html&rct=j&q=&esrc=s&opi=95576897&sa=U&ved=0ahUKEwiKztDw0bKFAxWqVqQEHUVeCQwQ2SkIwAE&usg=AOvVaw2UtBBfaC9Gfxh_WwD9eB_Q",
    "title":"Set tre katana di roronoa zoro (one piece) complete di fondina",
    "source":"AAnticheSanMarino",
    "price":"89,90\\xc2\\xa0\\xe2\\x82\\xac",
    "extracted_price":89.9,
    "thumbnail":"https://encrypted-tbn0.gstatic.com/shopping?q=tbn:ANd9GcTNZtKMoGht9qQ-AsOQQnf-ULYCPLrGvY5MKBPVUwQ-JsVIC88q792L55uooEvWAuhFjXDBD84H7Hp8gOi7TIAoJugxFUz6AMmoBSrjF5V4&usqp=CAE",
    "delivery_options":"Consegna a 9,00\\xc2\\xa0\\xe2\\x82\\xac",
    "delivery_options_extracted_price":9
  }
]
```
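The escape sequences in price and delivery_options show up because response.content returns raw bytes: \xc2\xa0 is the UTF-8 encoding of a non-breaking space, and \xe2\x82\xac is the euro sign. Working with response.json() gives you decoded strings instead. The endpoint already provides a numeric extracted_price, but if you ever need to parse the formatted string yourself, here is a sketch that assumes Italian number formatting (comma as the decimal separator, dot for thousands). parse_eu_price is a hypothetical helper.

```python
raw = b'89,90\xc2\xa0\xe2\x82\xac'  # bytes as they appear in response.content
price_text = raw.decode('utf-8')     # '89,90' + non-breaking space + euro sign


def parse_eu_price(text):
    """Turn a price like '1.234,50 €' into a float, assuming Italian number formatting."""
    digits = ''.join(ch for ch in text if ch.isdigit() or ch in ',.')
    # Drop thousands separators, then use a dot as the decimal separator
    return float(digits.replace('.', '').replace(',', '.'))


print(parse_eu_price(price_text))  # 89.9
```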

Step 4: extract specific data points from the JSON response

The endpoint provides many details about the products. However, in our case, we only want to collect the product title, product ID, price, product URL, and thumbnail URL.

Luckily, the endpoint returns all this information in a JSON response with predictable key-value pairs.

We can use these key-value pairs to extract specific data points from the response:

```python
response = requests.get('https://api.scraperapi.com/structured/google/shopping', params=payload)
data = response.json()

organic_results = data['shopping_results']

for product in organic_results:
    product_title = product['title']
    print(product_title)
```

After accessing shopping_results, which contains the organic results for the specific query, we iterate through the results and get the title key’s value, which is the product name.

```
Set tre katana di roronoa zoro (one piece) complete di fondina
```

Then, we do the same thing for the rest of the elements:

```python
for product in organic_results:
    product_title = product['title']
    product_id = product['docid']
    product_price = product['price']
    product_url = product['link']
    product_image = product['thumbnail']
```
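Indexing with product['thumbnail'] raises a KeyError if a result lacks that key, which would stop the whole run. I haven't confirmed whether the endpoint ever omits these fields, so treat this as a defensive sketch: dict.get returns None for missing keys instead of raising. extract_product is a hypothetical helper.

```python
def extract_product(product):
    """Pick the fields we need from one shopping result, tolerating missing keys."""
    return {
        'Name': product.get('title'),
        'ID': product.get('docid'),
        'Price': product.get('price'),
        'URL': product.get('link'),
        'Thumbnail': product.get('thumbnail'),
    }


# A trimmed-down result shaped like the sample response above (no link or thumbnail)
sample_result = {
    'position': 1,
    'docid': '766387587079352848',
    'title': 'Set tre katana di roronoa zoro (one piece) complete di fondina',
    'price': '89,90\u00a0€',
}

print(extract_product(sample_result))
```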

Step 5: export Google Shopping results

Save extracted data to a JSON file

Before I show you how to export the data to a CSV file, here’s a quick way to get all of it out of the terminal: save the response to a JSON file.

```python
import json

import requests

payload = {
    'api_key': 'YOUR_API_KEY',
    'query': 'katana set',
    'country_code': 'it',
    'tld': 'it',
    'num': '100'
}

response = requests.get('https://api.scraperapi.com/structured/google/shopping', params=payload)
data = response.json()

# Save the full response to a JSON file
with open('google-shopping-results.json', 'w') as f:
    json.dump(data, f)
```

In this scenario, we don’t need to do much because the response is already JSON data. All we need to do is import the json module and dump() the data into a file.
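A couple of optional tweaks make the saved file easier to read: writing it as UTF-8 with ensure_ascii=False keeps characters like € intact instead of escaping them, and indent=2 pretty-prints the output. Here is a minimal sketch with hypothetical save_json and load_json helpers:

```python
import json


def save_json(data, path):
    """Write the response data to disk as readable UTF-8 JSON."""
    with open(path, 'w', encoding='utf-8') as f:
        # ensure_ascii=False keeps characters like € instead of \u20ac escapes
        json.dump(data, f, ensure_ascii=False, indent=2)


def load_json(path):
    """Read a previously saved response back into Python objects."""
    with open(path, encoding='utf-8') as f:
        return json.load(f)
```

For example, save_json(response.json(), 'google-shopping-results.json') replaces the with block above.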

Save extracted data into a CSV file

To export our JSON data as a CSV file, create an empty list before the payload.

```python
products = []
```

Then, because we already have the logic to pick specific data points from the JSON response, we can append the extracted product information to this list using the append() method.

```python
products.append({
    'Name': product_title,
    'ID': product_id,
    'Price': product_price,
    'URL': product_url,
    'Thumbnail': product_image
})
```

This snippet goes inside your for loop, so your script appends each product’s details as a single dictionary on every iteration.

To test it out, let’s print products and see what it returns.

```
{
  "Name":"Set di 3 katane rosse con supporto",
  "ID":"14209320763374218382",
  "Price":"85,50\\xa0€",
  "URL":"https://www.google.it/url?url=https://www.desenfunda.com/it/set-di-3-katane-rosse-con-supporto-ma-32583-230996.html&rct=j&q=&esrc=s&opi=95576897&sa=U&ved=0ahUKEwjo4-SRh7OFAxVfVqQEHaxtDQAQ2SkIxww&usg=AOvVaw0ktLGrn1luMDxTqbWCa0WG",
  "Thumbnail":"https://encrypted-tbn3.gstatic.com/shopping?q=tbn:ANd9GcRyyVQ8ae4Fxo0Nw5wwFI2xYMo6dIj_sWYfUqc1vbR8MuS-mybf4MZSb3LgprZrSKkhwP1eU97XYpVO99E9YF3VwaASfIeMtjldEd2Bf2tS5ghm3OWXWtDvHQ&usqp=CAE"
}, MORE DATA
```

Pro Tip

You can simplify your code by appending the information as it’s getting scraped:

```python
for product in organic_results:
    products.append({
        'Name': product['title'],
        'ID': product['docid'],
        'Price': product['price'],
        'URL': product['link'],
        'Thumbnail': product['thumbnail']
    })
```
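If you prefer, a list comprehension builds the same list in a single expression. This is an equivalent alternative to the loop, wrapped in a hypothetical to_rows function so it's easy to reuse:

```python
def to_rows(organic_results):
    """Build one row per product with a list comprehension instead of append()."""
    return [
        {
            'Name': product['title'],
            'ID': product['docid'],
            'Price': product['price'],
            'URL': product['link'],
            'Thumbnail': product['thumbnail'],
        }
        for product in organic_results
    ]
```

With it, the whole extraction step becomes products = to_rows(data['shopping_results']).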

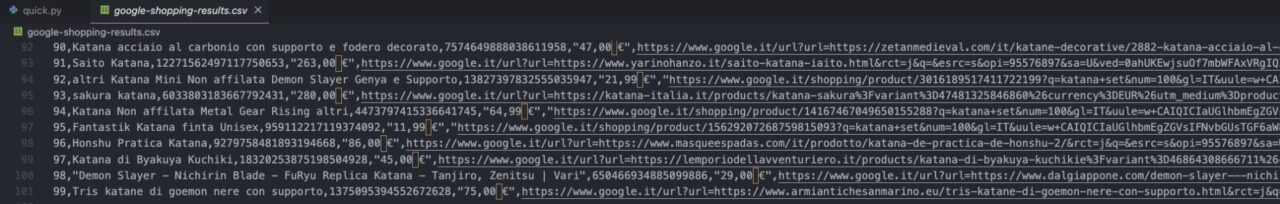

Now that we know it’s working and the data is inside our products list, it’s time to use Pandas to create a DataFrame and save the information as a CSV file.

```python
df = pd.DataFrame(products)
df.to_csv('google-shopping-results.csv', index=False)
```

In your folder, you’ll find the saved CSV:

Note: Remember, the DataFrame index starts at 0, so 99 is the last of the 100 results.

Congratulations, you just scraped Google Shopping search results!
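Pandas is convenient, but if you only need a CSV file, the standard library's csv.DictWriter can write the products list directly. Here is a sketch, assuming every dictionary uses the same five keys built earlier; write_products_csv is a hypothetical helper.

```python
import csv

FIELDNAMES = ['Name', 'ID', 'Price', 'URL', 'Thumbnail']


def write_products_csv(products, path):
    """Write a list of product dicts to CSV using only the standard library."""
    # newline='' lets the csv module control line endings itself
    with open(path, 'w', newline='', encoding='utf-8') as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(products)
```

Calling write_products_csv(products, 'google-shopping-results.csv') produces the same columns as the pandas version, without an index column.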

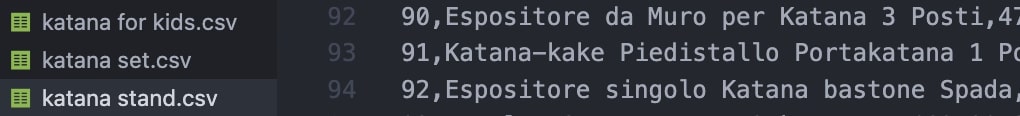

Scraping multiple Google Shopping search terms

You’ll want to keep track of several search terms for most projects. To do so, let’s tweak the code we’ve already written a bit.

For starters, let’s add a list of terms we’ll iterate over.

```python
keywords = [
    'katana set',
    'katana for kids',
    'katana stand'
]
```

Next, we’ll create a new for loop, move all our code inside it, and pass the keyword variable to the payload as the query.

```python
for keyword in keywords:
    payload = {
        'api_key': 'YOUR_API_KEY',
        'query': keyword,
        'country_code': 'it',
        'tld': 'it',
        'num': '100'
    }
```

From there, the code stays the same. However, we don’t want all the results mixed together, so instead of adding everything to the same file, let’s tell our script to create a new file for each keyword:

- Move the empty list inside the outer for loop (you can add it before the payload)
- Then, use the keyword variable to create the file name dynamically

```python
for keyword in keywords:
    products = []

    # HERE'S THE REST OF THE CODE

    df = pd.DataFrame(products)
    df.to_csv(f'{keyword}.csv')
```

For every search term in the keywords list, our scraper will create a new, empty products list, extract the top 100 product results, append all of this data to products, and then export the list to a CSV file.
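One thing to watch: f'{keyword}.csv' uses the raw search term as the file name, so 'katana set' becomes katana set.csv, and a term containing a character like / would produce an invalid path. A hypothetical helper that turns each keyword into a simpler file name could look like this. Note that it drops accented and other non-ASCII letters, and two different keywords could collapse to the same name.

```python
import re


def keyword_to_filename(keyword, extension='csv'):
    """Turn a search term into a lowercase, filesystem-friendly file name."""
    # Replace every run of characters other than a-z and 0-9 with a single hyphen
    slug = re.sub(r'[^a-z0-9]+', '-', keyword.lower()).strip('-')
    return f"{slug or 'results'}.{extension}"


for keyword in ['katana set', 'katana for kids', 'katana stand']:
    print(keyword_to_filename(keyword))
# katana-set.csv
# katana-for-kids.csv
# katana-stand.csv
```

Inside the loop, you would then call df.to_csv(keyword_to_filename(keyword)).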

Add as many search terms as you need to the keywords variable to scale this project, and let ScraperAPI do the rest.
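Once the list grows, it's easier to keep the search terms in a plain text file, one per line. Here is a minimal sketch, assuming a UTF-8 file; load_keywords and keywords.txt are hypothetical names.

```python
def load_keywords(path):
    """Read one search term per line, skipping blank lines and duplicates."""
    keywords = []
    seen = set()
    with open(path, encoding='utf-8') as f:
        for line in f:
            term = line.strip()
            if term and term not in seen:
                seen.add(term)
                keywords.append(term)
    return keywords
```

Then replace the hardcoded list with keywords = load_keywords('keywords.txt').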


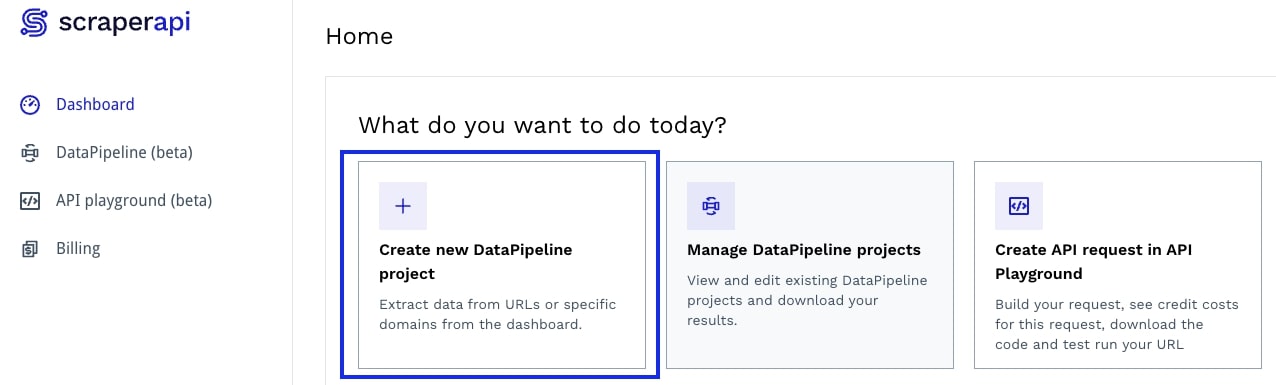

Collecting Google Shopping data with no-code

ScraperAPI also offers DataPipeline, a hosted scraper that lets you build entire scraping projects through a visual interface. With it, we can automate the entire scraping process without writing or maintaining any code.

Let me show you how to get started.

Note: Check DataPipeline’s documentation to learn the ins and outs of the tool.

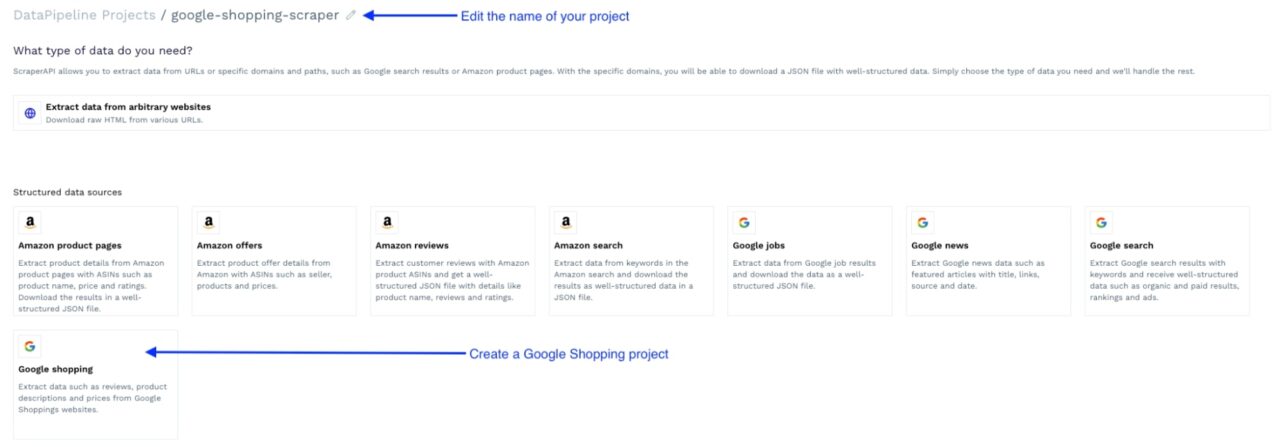

Create a new Google Shopping project

Log in to your ScraperAPI account to access your dashboard and click on the Create a new DataPipeline project option at the top of the page.

It’ll open a new window where you can edit the name of your project and choose between several ready-to-use templates. Click on Google Shopping to get started.

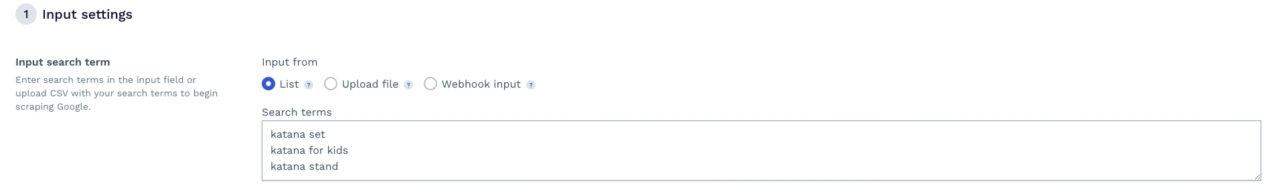

Set up your scraping project

The first step is uploading a list of search terms for which to scrape data. You can monitor/scrape up to 10,000 search terms per project.

You can add the terms directly to the text box (one per line), upload a list as a text file, or add a Webhook link for a more dynamic list of terms.

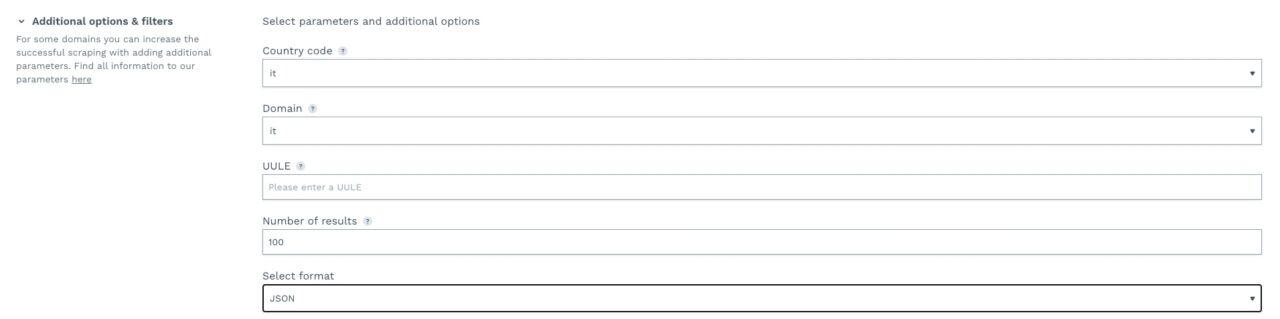

You can further customize your project by enabling different parameters to make the returned data more accurate and fit your needs.

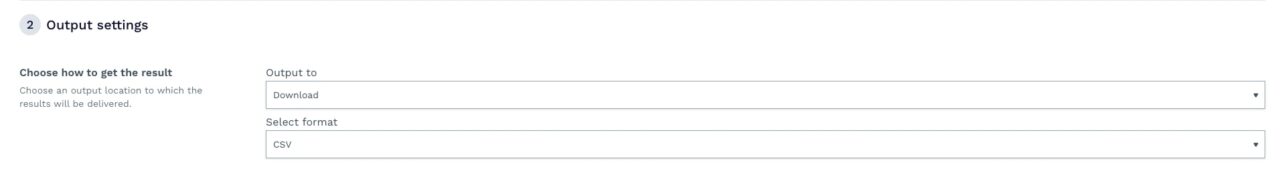

Next, choose your preferred delivery option (downloading the data or sending it via Webhook) and format (JSON or CSV).

Note: You can download the results regardless of whether you choose Webhook as a delivery option.

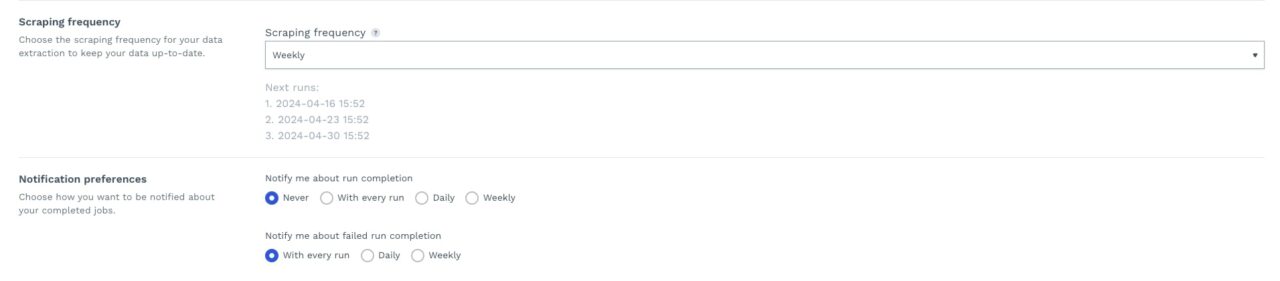

Lastly, let’s specify the scraping frequency (a one-time job or custom intervals defined with Cron) and our notification preferences.

Run your Google Shopping scraper

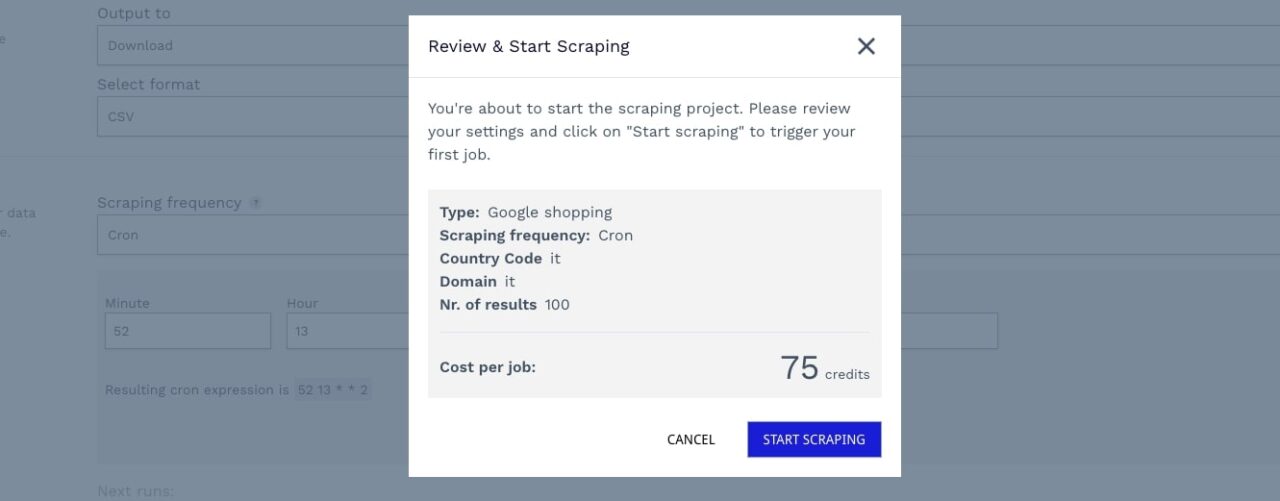

Once everything is set up, click Review and start scraping at the bottom of the page.

It’ll provide an overview of the project and an accurate calculation of the number of credits spent per run, keeping pricing as transparent as possible.

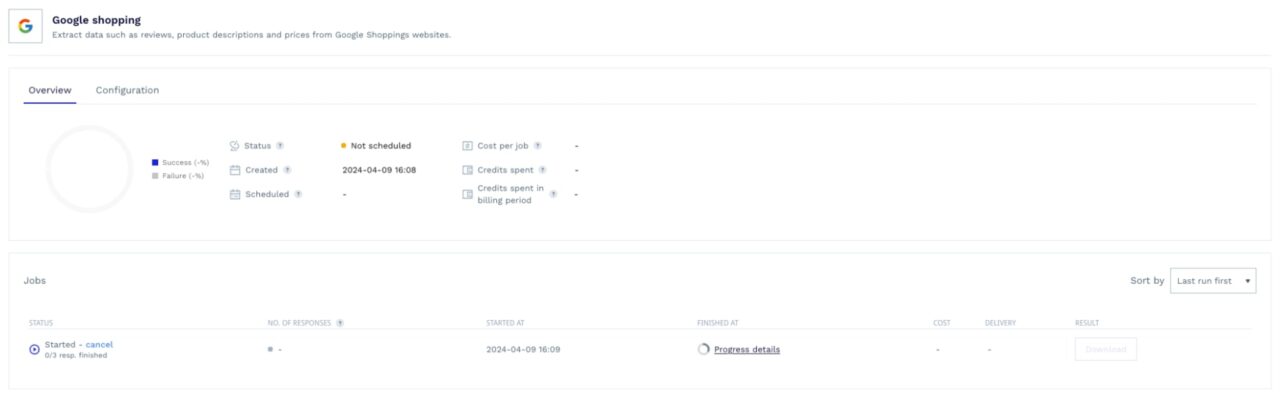

After you click Start scraping, the tool takes you to the project’s dashboard, where you can monitor performance, cancel running jobs, and review your configurations.

You’ll also be able to download the results after every run, so as more jobs are completed, you can always come back and download the data from previous ones.

That’s it. You are now ready to scrape thousands of Google Shopping results!

Wrapping up

I hope that by now, you feel ready to start your own project and get the data you need to launch that new product, create that app, or run a competitor analysis relying on your own data.

To summarize, scraping Google Shopping with ScraperAPI is as simple as:

- Importing Requests and Pandas into your Python project
- Sending a get() request to the https://api.scraperapi.com/structured/google/shopping endpoint, with a payload (containing your data requirements) as params
- Picking the data you’re interested in using the JSON key-value pairs from the response
- Creating a DataFrame and exporting the data to a CSV or JSON file

That said, I suggest using DataPipeline’s Google Shopping template to automate the entire process. This will save you time and money in development costs and let you focus on gaining insights from the data.

Until next time, happy scraping!

Frequently asked questions

By scraping Google Shopping, you can access a wide range of sellers and products, including pricing data, product details, sellers’ data like inventories and offerings, and customers’ reviews and ratings.

Because this pool of products is so diverse, you can use this information for competitive analysis, create dynamic pricing strategies, and optimize your SEO. You can also analyze customer sentiment toward specific products and brands, helping you build winning business strategies and launch successful products.


Google Shopping uses advanced anti-scraping mechanisms to block bots and scripts, making it hard to collect data from its platform programmatically.

The site also uses JavaScript to inject product listings into the page, adding an extra layer of complexity as you must render the page before seeing any data.

To access this data, you can use a scraping solution like ScraperAPI’s Google Shopping endpoint. This endpoint allows you to collect Google Shopping search results in JSON format consistently and at scale.

As of April 2024, scraping publicly available data is generally considered legal. You don’t need to log into an account or bypass any paywall to access Google Shopping results, so this data counts as publicly available.